NBER WORKING PAPER SERIES

THE WELFARE EFFECTS OF NUDGES:

A CASE STUDY OF ENERGY USE SOCIAL COMPARISONS

Hunt Allcott

Judd B. Kessler

Working Paper 21671

http://www.nber.org/papers/w21671

NATIONAL BUREAU OF ECONOMIC RESEARCH

1050 Massachusetts Avenue

Cambridge, MA 02138

October 2015

We are grateful to Paula Pedro for outstanding research management, and we thank Opower and Central Hudson Gas and Electric for productive collaboration and helpful feedback. We also thank Nava Ashraf, Stefano DellaVigna, Avi Feller, Michael Greenstone, Ben Handel, Guido Imbens, Kelsey Jack, David Laibson, John List, Todd Rogers, Dmitry Taubinsky, and seminar participants at Berkeley, the Consumer Financial Protection Bureau, Cornell, Microsoft Research, New York University, Stanford Institute for Theoretical Economics, Wesleyan, and Yale for comments. We are grateful to the Sloan Foundation and Poverty Action Lab for grant funding. This RCT was registered in the American Economic Association Registry for randomized control trials under trial number 713. Code to replicate the analysis is available from Hunt Allcott's website. The views expressed herein are those of the authors and do not necessarily reflect the views of the National Bureau of Economic Research.

NBER working papers are circulated for discussion and comment purposes. They have not been peer-reviewed or been subject to the review by the NBER Board of Directors that accompanies official NBER publications.

© 2015 by Hunt Allcott and Judd B. Kessler. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source.

The Welfare Effects of Nudges: A Case Study of Energy Use Social Comparisons

Hunt Allcott and Judd B. Kessler

NBER Working Paper No. 21671

October 2015

JEL No. C44,C53,D12,L94,Q41,Q48

ABSTRACT

"Nudge"-style interventions are typically evaluated on the basis of their effects on behavior, not social welfare. We use a field experiment to measure the welfare effects of one especially policy-relevant intervention, home energy conservation reports. We measure consumer welfare by sending introductory reports and using an incentive-compatible multiple price list to determine willingness-to-pay to continue the program. We combine this with estimates of implementation costs and externality reductions to carry out a comprehensive welfare evaluation. We find that this nudge increases social welfare, although traditional program evaluation approaches overstate welfare gains by a factor of five. To exploit significant individual-level heterogeneity in welfare gains, we develop a simple machine learning algorithm to optimally target the nudge; this would more than double the welfare gains. Our results highlight that nudges, even those that are highly effective at changing behavior, need to be evaluated based on their welfare implications.

Hunt Allcott

Department of Economics

New York University

19 W. 4th Street, 6th Floor

New York, NY 10012

and NBER

Judd B. Kessler

Department of Business Economics and Public Policy

The Wharton School

University of Pennsylvania

3620 Locust Walk

Philadelphia, PA 19104

A randomized controlled trials registry entry is available at:

https://www.socialscienceregistry.org/trials/713

Code for replication is available at:

https://www.dropbox.com/s/l2m8l55o3wnuexi/AllcottKessler_Replication.zip?dl=0

Policymakers and academics are increasingly interested in “nudges,” such as information provision, reminders, social comparisons, default options, and commitment contracts, which can affect behavior without changing prices or choice sets. Nudges are being used to encourage a variety of privately-beneficial and socially-beneficial behaviors, such as healthy eating, exercise, organ donation, charitable giving, retirement savings, hand washing, and environmental conservation. The US, British, and Australian governments have set up “nudge units” to infuse these ideas into the policy process.[1] A growing academic literature evaluates nudge-style interventions in various domains.[2]

With only a few exceptions discussed below, nudges are typically evaluated based on the magnitude of behavior change or on cost effectiveness. When a nudge significantly increases a positive behavior at low cost, policymakers often advocate that it be broadly adopted. A full social welfare evaluation could produce different policy prescriptions, however, because people being nudged often experience two types of benefits and/or costs that typical evaluations do not consider. First, nudge recipients often incur costs in order to change behavior. For example, people who quit smoking save money on cigarettes but give up any enjoyment from smoking, and healthy eating might mean paying more for vegetables and giving up tasty desserts.[3] Second, the nudge itself may directly generate positive or negative utility. For example, seeing cigarette warning labels with graphic images of smoking-related diseases can be unpleasant, and body weight report cards could make children feel guilty or ashamed. Building on Caplin (2003) and Loewenstein and O’Donoghue (2006), Glaeser (2006) argues that many nudges are essentially emotional taxes that reduce utility but do not raise revenues.

This paper presents a social welfare evaluation of Home Energy Reports (HERs), one-page letters that compare a household’s energy use to that of its neighbors and provide energy conservation tips. While HERs are just one case study, they are one of the most prominent and frequently-studied nudges. Opower, the leading HER provider, now works with 95 utility companies in nine countries, sending HERs regularly to 15 million households. There has been significant academic interest in HERs, including seminal studies by Schultz et al. (2007) and Nolan et al. (2008) and many follow-on evaluations of social comparisons and other “behavior-based” energy conservation interventions.[4] There is also a plethora of industry studies and regulatory evaluations of such programs.[5]

[1] In September 2015, the US “nudge unit,” the Social and Behavioral Sciences Team, released results from 15 experiments, and President Obama signed an executive order that directs federal agencies to use behavioral insights when they “may yield substantial improvements in social welfare and program outcomes” (EOP 2015).

[2] One indicator of academic interest is that the book Nudge (Thaler and Sunstein 2008) has been cited more than 5000 times.

[3] Of course, if the policymaker has correctly designated a “good” behavior to nudge people toward, this typically means that the behavior change generates net benefits for the individual. However, the magnitude of these net benefits would ideally be calculated and weighed against a nudge’s other costs and benefits.

[4] Academic papers on energy use social comparison reports include Kantola, Syme, and Campbell (1984), Allcott (2011, 2015), Ayres, Raseman, and Shih (2013), Costa and Kahn (2013), Dolan and Metcalfe (2013), Allcott and Rogers (2014), and Sudarshan (2014). Delmas, Fischlein, and Asensio (2013) review 156 published field trials studying social comparisons and other informational interventions to induce energy conservation.

These existing evaluations of behavior-based energy conservation programs often make policy recommendations by comparing program implementation costs to the value of energy saved. This approach is so well-established that energy industry regulators have a name for it: the “program administrator cost test.” As with most evaluations of other nudges, this ignores benefits and costs (other than energy cost savings) experienced by nudge recipients. For example, what financial costs did consumers incur to generate the observed energy savings, such as installing improved insulation? What is the cost of time devoted to turning off lights or adjusting thermostats? What is the value of comfort from better-insulated homes, or the discomfort from setting thermostats to energy-saving temperatures? Are there meaningful psychological benefits or costs of using social comparisons to inspire or guilt people into conserving energy?

Home Energy Reports have two features that we leverage to conduct a social welfare analysis that considers the full range of recipient benefits and costs. First, they are a private good that can be sold. Second, the standard policy is to deliver them regularly, e.g. every two months, over several years. These two features mean that it is both possible and policy-relevant to measure willingness-to-pay (WTP) for future HERs in a sample of experienced past recipients. In simple terms, our approach is to send people one year of HERs, each of which has a similar structure but includes new conservation tips and updated energy use feedback, and then ask them how much they are willing to pay to receive HERs for a second year. Because these people have experience with HERs from the first year, we respect their WTP as an accurate measure of their welfare from receiving more of them. We then use standard economic tools to evaluate the welfare effects of the second year of HERs, weighing consumer welfare gains against implementation costs and reductions in uninternalized externalities.

More specifically, we study a program providing HERs to about 10,000 residential natural gas consumers at a utility in upstate New York over the 2014-2015 and 2015-2016 winter heating seasons. At the end of winter 2014-2015, we surveyed all HER recipients by mail and phone with multiple price lists (MPLs) that trade off next winter’s HERs with checks for different amounts of money. We designed the MPL to allow negative WTP as well as positive WTP, as some households opt out of HER programs even though the reports are free. The MPLs were incentive compatible – depending on their responses, each household will receive a check from the utility and/or more HERs in winter 2015-2016. Because the initial HER recipients were randomly selected from a larger population, we can easily estimate the effects of HERs on energy use, which we then translate to a value of uninternalized externalities using parameters such as the social cost of carbon.
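The bounding logic behind an MPL can be sketched in a few lines. This is an illustration only: the function name `wtp_interval` and the row amounts are hypothetical, not the amounts on the actual survey (which Figure 4 reproduces). Each row offers a choice between (A) more HERs plus a check for `with_her` dollars and (B) a check for `without_her` dollars, so the implied price of the HERs in that row is `without_her - with_her`. Choosing A at that price implies WTP is at least the price; choosing B implies WTP is at most the price. Rows in which option A carries the larger check have a negative price, which is how the list can register negative WTP.

```python
from typing import List, Optional, Tuple

# A hypothetical MPL row: (check paid together with more HERs, check paid without HERs).
Row = Tuple[float, float]


def wtp_interval(rows: List[Row], chose_her: List[bool]) -> Tuple[Optional[float], Optional[float]]:
    """Bound willingness-to-pay from MPL choices.

    Choosing the HER option at implied price q means WTP >= q; choosing the
    check-only option means WTP <= q. Returns (lower, upper), where None means
    unbounded on that side. Raises ValueError for inconsistent answers.
    """
    if len(rows) != len(chose_her):
        raise ValueError("need one choice per row")
    lower: Optional[float] = None
    upper: Optional[float] = None
    for (with_her, without_her), took_her in zip(rows, chose_her):
        price = without_her - with_her  # negative price: paid extra to take HERs
        if took_her:
            lower = price if lower is None else max(lower, price)
        else:
            upper = price if upper is None else min(upper, price)
    if lower is not None and upper is not None and lower > upper:
        raise ValueError("inconsistent (multiple-switching) responses")
    return lower, upper


rows = [(9, 0), (5, 0), (0, 0), (0, 5), (0, 9)]  # hypothetical; implied prices -9, -5, 0, 5, 9
print(wtp_interval(rows, [True, True, True, False, False]))  # (0, 5)
```

A respondent who takes the HERs at every price up to $0 but declines them at $5 has WTP between $0 and $5; one who declines them even when paid $9 has WTP of at most -$9.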

We find that the average household is willing to pay just under $3 for a second year of Home Energy Reports. While most people like HERs, 35 percent have weakly negative WTP – that is, they prefer not to be nudged even if the nudge is free. In support of the usual revealed preference assumption, the data suggest that WTP is a reliable measure of how much people like HERs: for example, WTP is highly correlated with qualitative evaluations of the HERs and beliefs about savings made possible by future HERs. We estimate that WTP equals about 51 percent of retail energy cost savings, meaning that the remaining 49 percent represents net financial, time, comfort, and psychological costs required to generate the energy savings. This high ratio of energy savings to costs suggests that, leaving aside the implementation cost, HERs provide privately-useful conservation information and/or psychological benefits. However, this 49 percent “non-energy cost” is not included in previous HER evaluations, nor in most evaluations of similar nudges in other domains.

[5] These include Violette, Provencher, and Klos (2009), Ashby et al. (2012), Integral Analytics (2012), KEMA (2012), Opinion Dynamics (2012), and Perry and Woehleke (2013), among many others.

Our main estimates suggest that the second year of this HER program increases social welfare by $0.70 per household. However, the standard approach of ignoring non-energy costs overstates this welfare gain by a factor of five. We find the same qualitative results in a more speculative calculation where we generalize the 49 percent non-energy cost rule of thumb to the full course of a typical HER program: under this assumption, the typical program likely increases welfare, but ignoring non-energy costs overstates welfare gains by a factor of 2.4.

The nudge’s welfare effects are driven down by the fact that about 59 percent of nudge recipients are not willing to pay the social marginal cost of the nudge, including many who have negative WTP. On the other hand, more than 30 percent of recipients are willing to pay more than twice the social marginal cost. A natural response to heterogeneous valuations would be to price the nudge at expected net social cost and let people opt in if they want to. In this context, however, inertia is extremely powerful: HERs involve much lower stakes than contexts such as health insurance and retirement savings plans, where default settings are already known to be powerful, as studied by Madrian and Shea (2001), Kling et al. (2012), Handel (2013), Ericson (2014), and others. We show that even under generous assumptions, an opt-in program is unlikely to enroll enough people to generate larger welfare gains than the current opt-out policy. Instead, we train a simple machine learning algorithm to set a “smart default” – that is, to target the program at consumers who would generate the largest welfare gains if nudged. The smart default approach can more than double the welfare gains, holding constant the number of nudge recipients.
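The targeting idea can be sketched under simple assumptions. Suppose each household has a predicted net welfare gain from being nudged (its predicted WTP plus externality and markup effects, minus the nudge's cost). Holding the number of recipients fixed at k, a "smart default" sends the nudge to the k households with the highest predictions. The function names and numbers below are hypothetical, and the prediction step itself (the paper's machine learning algorithm, evaluated in Section VI) is not reproduced here.

```python
from typing import List, Sequence


def target_top_k(predicted_gain: Sequence[float], k: int) -> List[int]:
    """Indices of the k households with the largest predicted net welfare gain."""
    if not 0 <= k <= len(predicted_gain):
        raise ValueError("k must be between 0 and the number of households")
    # Sort household indices by predicted gain, largest first.
    ranked = sorted(range(len(predicted_gain)), key=lambda i: predicted_gain[i], reverse=True)
    return ranked[:k]


def realized_gain(true_gain: Sequence[float], chosen: Sequence[int]) -> float:
    """Total welfare gain if exactly the households in `chosen` are nudged."""
    return sum(true_gain[i] for i in chosen)


# Hypothetical per-household net gains (dollars); negative entries are households
# whose WTP falls short of the nudge's social marginal cost.
predicted = [3.0, -1.0, 0.5, 2.0, -0.5]
chosen = target_top_k(predicted, k=2)           # [0, 3]
targeted = realized_gain(predicted, chosen)     # 5.0
untargeted = realized_gain(predicted, [0, 1])   # an arbitrary pair of households: 2.0
```

For simplicity the toy treats the predictions as exact; in practice the targeted set would be chosen from model predictions and its gain evaluated against true or held-out outcomes.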

These results have important but nuanced implications for energy policy. Many utilities send HERs to help comply with regulations called Energy Efficiency Portfolio Standards (EEPS), which require utilities to induce a specific quantity of energy savings each year. While this paper finds that net benefits of HERs are less than previously reported, benefit-cost analyses of alternative energy efficiency programs such as home retrofits also may suffer from systematic biases.[6] Thus, substituting to alternative programs that have not been subjected to a complete social welfare analysis may not be better than continuing an HER program. At a minimum, our results suggest that there is much work to be done to correctly measure the welfare effects of energy efficiency programs.

[6] One potential source of systematic bias is that actual energy savings may differ from simulation-based assumptions; see Nadel and Keating (1991) and more recent studies such as Allcott and Greenstone (2015) and Fowlie, Greenstone, and Wolfram (2015). A second source of bias is that according to Kushler et al. (2012), only 30 percent of energy efficiency programs measure non-energy benefits and costs such as the financial, time, and utility costs discussed above. Depending on the program, these factors could bias welfare estimates in either direction.

We are not the first or only researchers to consider the welfare effects of nudges. A handful of previous empirical and theoretical analyses of behaviorally-motivated policies have recognized the difference between effects on behavior and effects on welfare, including Carroll, Choi, Laibson, Madrian, and Metrick (2009) and Bernheim, Fradkin, and Popov (2015) on optimal retirement savings plan defaults; Handel (2013) on insurance plan choice; Bhattacharya, Garber, and Goldhaber-Fiebert (2015) on exercise commitment contracts; and Reyniers and Bhalla (2013) and Cain, Dana, and Newman (2014) on charitable giving. There is an active literature debating the welfare gains from cigarette graphic warning labels, including Weimer, Vining, and Thomas (2009), FDA (2011), Chaloupka et al. (2014), Ashley, Nardinelli, and Lavaty (2015), Chaloupka, Gruber, and Warner (2015), Cutler, Jessup, Kenkel, and Starr (2015), Jin, Kenkel, Liu, and Wang (2015), and others. Even within these papers that are grounded in a welfare framework, however, most do not actually implement an empirical social welfare analysis of a nudge because measuring consumer welfare can be so challenging.

Although not a study of a nudge intervention, DellaVigna, List, and Malmendier (2012) is similar in spirit: they point out that charitable donation appeals could increase utility by activating donors’ warm glow or instead decrease utility by imposing social pressure. They combine an “avoidance design” – measuring whether people avoid opportunities to donate – with a structural model, concluding that door-to-door fundraising drives can reduce welfare even as they raise money for charity. Herberich, List, and Price (2012) use the same design to show that both altruism and social pressure motivate people to buy energy efficient lightbulbs from door-to-door salespeople, and Andreoni, Rao, and Trachtman (2011) and Trachtman et al. (2015) use a different avoidance design to study motivations for charitable giving, although none of these latter three papers includes a social welfare analysis. Avoidance designs achieve the same conceptual goal as our MPL: both allow the analyst to observe people opting in or out of a nudge (or opportunity to donate) at some cost. Our MPL design is especially useful, however, because it immediately gives a WTP, whereas avoidance behaviors require additional assumptions or structural estimates to be translated into dollars.

Section I formally defines a “nudge” and derives a formula for welfare effects. Sections II and III present the experimental design and data. Sections IV and V present the empirical results and social welfare calculation. Section VI evaluates targeting and opt-in policies, and Section VII concludes.

I Theoretical Framework

This section lays out a simple theoretical framework that formalizes what we mean by a “nudge” and derives an equation for welfare effects.

I.A Consumers and Producers

We model a population of heterogeneous consumers who derive utility from consuming numeraire good $x$ and a continuous choice $e$, which in our application is energy use. With slight modifications to what follows, $e$ could also represent healthful eating, exercise, using preventive health care, charitable giving, or other actions. $e$ generates consumption utility $f(e;\alpha)$, where $\alpha$ is a taste parameter.

To capture imperfect information or behavioral bias, we allow a factor $\gamma$ that affects choice but not experienced utility. For example, $\gamma$ could represent noise in a signal of an unknown production function for health or household energy services, or it could represent a mistake in evaluating the private net benefits of $e$, perhaps due to inattention or present bias. Consumers have perceived consumption utility $\hat{f}(e;\alpha,\gamma)$, which may or may not equal $f(e;\alpha)$.

$e$ is produced at constant marginal cost $c_e$ and sold at constant price $p_e$, giving markup $\pi_e = p_e - c_e$ per unit. For another application, one might extend the model to endogenize price. In our application, $e$ is sold by a regulated utility that is allowed a constant markup over marginal cost, so $\pi_e$ is exogenous and positive. In a health application, $\pi_e$ could represent mispricing of health care from insurance. $e$ imposes constant externality $\phi_e$ per unit. Consumers have income $y$ and pay lump-sum tax $T$ to the government.

We include a “moral utility” term $M = m - \mu e$. Following Levitt and List (2007), moral utility arises when actions impose externalities, are subject to social norms, or are scrutinized by others. This concept is especially appropriate for our setting, where energy production causes environmental externalities and Home Energy Reports scrutinize energy use and present social norms. The moral price $\mu$ can be thought of as a “psychological tax” or “moral tax” on $e$, as in Glaeser (2006, 2014) and Loewenstein and O’Donoghue (2006), or as fear of future consequences of $e$, as in Caplin (2003). A larger $\mu$ can also represent a moral subsidy for reducing $e$. To model a moral subsidy, imagine that consumers receive utility $\mu$ for every unit of $e$ not consumed, up to $m_s$, where $m_s > e$. Moral utility is then $M = \mu(m_s - e)$, which equals $m - \mu e$ when we set $m = \mu m_s$. This framework can also allow moral utility to depend on consumption relative to a social norm $s$: if $M = m_s - \mu(e - s)$, this equals $m - \mu e$ when we set $m = m_s + \mu s$. $m$ also captures any “windfall” utility change, if recipients like or dislike the nudge regardless of $e$.
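The three specifications of moral utility above differ only in the intercept $m$; each implies the same marginal moral cost of $e$. This is why $m$ (for example, a windfall gain or loss from receiving the nudge) shifts the level of utility but not the choice of $e$, which depends on $\mu$ alone. A short check:

```latex
\begin{aligned}
M &= m - \mu e, & \frac{\partial M}{\partial e} &= -\mu,\\
M &= \mu(m_s - e) = \underbrace{\mu m_s}_{=\,m} - \mu e, & \frac{\partial M}{\partial e} &= -\mu,\\
M &= m_s - \mu(e - s) = \underbrace{m_s + \mu s}_{=\,m} - \mu e, & \frac{\partial M}{\partial e} &= -\mu.
\end{aligned}
```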

Let the vector $\theta = \{y, \alpha, \gamma, m, \mu\}$ summarize all factors that vary across consumers. We assume that utility is quasilinear in $x$ and that $\hat{f}' > 0$, $\hat{f}'' < 0$, and $\hat{f}'(0) = \infty$. The consumer maximizes

$$\max_{x,e}\ \hat{U}(\theta) = x + \hat{f}(e;\alpha,\gamma) + m - \mu e, \tag{1}$$

subject to budget constraint

$$y - T \ge x + e\,p_e. \tag{2}$$

Consumers’ equilibrium choice of $e$, denoted $\tilde{e}(\theta)$, is determined by the following first-order condition:

$$\hat{f}'(\tilde{e};\alpha,\gamma) - \mu = p_e. \tag{3}$$

This equation shows that increasing the moral price $\mu$ can have the same effect on behavior as increasing the price $p_e$. However, as we discuss below, a price increase and a moral price increase are very different from a welfare perspective.
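To see equation (3) at work, assume purely for illustration (the paper does not specify a functional form) that $\hat{f}(e) = a \ln e$, which satisfies $\hat{f}' > 0$, $\hat{f}'' < 0$, and $\hat{f}'(0) = \infty$. The first-order condition $a/\tilde{e} - \mu = p_e$ then gives $\tilde{e} = a/(p_e + \mu)$, so raising $\mu$ by some amount moves $\tilde{e}$ exactly as much as raising $p_e$ by the same amount. The parameter values below are arbitrary.

```python
def chosen_energy(a: float, price: float, moral_price: float) -> float:
    """Solve the first-order condition a / e - moral_price = price for e.

    Assumes (for illustration only) f_hat(e) = a * ln(e), so f_hat'(e) = a / e.
    """
    if a <= 0 or price + moral_price <= 0:
        raise ValueError("need a > 0 and price + moral_price > 0")
    return a / (price + moral_price)


a, p_e = 100.0, 1.0  # hypothetical taste parameter and price
base = chosen_energy(a, p_e, 0.0)                        # 100.0
with_moral_tax = chosen_energy(a, p_e, 0.25)             # nudge raises mu by 0.25 -> 80.0
with_price_increase = chosen_energy(a, p_e + 0.25, 0.0)  # price rises by 0.25 -> 80.0
```

Behavior is identical in the two cases; the difference, developed below, is that a price increase transfers money while a moral tax simply lowers utility.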

Two market failures can cause equilibrium $\tilde{e}(\theta)$ to differ from the social optimum. First, $\gamma$ (imperfect information or other factors) affects choice but not experienced utility. Second, price $p_e$ may differ from social marginal cost $c_e + \phi_e$ because of the externality $\phi_e$ and markup $\pi_e$. In the first best, $p_e = c_e + \phi_e$ and the consumer would maximize experienced utility $U(\theta) = x + f(e;\alpha) + m - \mu e$.

I.B Nudges

The policymaker can implement a nudge at cost $C_n$ per consumer and maintains a balanced budget using lump-sum tax $T = C_n$. We formalize the nudge as a binary instrument $n \in \{1, 0\}$ that changes consumers’ $\gamma$, $m$, and $\mu$. Specifically, each consumer has possibly different potential outcomes $\theta^n$ for $n = 0$ vs. $n = 1$, in which $\gamma$, $m$, and $\mu$ could differ. We define $\Theta = \{\theta^0, \theta^1\}$ and let $F(\Theta)$ denote its distribution. In words, a nudge provides information, reduces bias, and/or persuades people by activating moral utility. This is intended to be consistent with the practical examples of Thaler and Sunstein (2008), and it is closely analogous to the formal definition in Farhi and Gabaix (2015).

I.C Private and Social Welfare Effects of Nudges

We define “pre-tax consumer welfare” as $V(\theta^n) = U(\theta^n) + T$, and we use $\Delta$ to represent effects of a nudge, e.g. $\Delta V \equiv V(\theta^1) - V(\theta^0)$. The effect of the nudge on pre-tax consumer welfare is

$$\Delta V = -\Delta\tilde{e}\cdot p_e + \Delta f + \Delta M. \tag{4}$$
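The intermediate step behind equation (4): with the budget constraint (2) binding, $x = y - T - \tilde{e}\,p_e$, so the lump-sum tax cancels out of pre-tax consumer welfare (experienced utility evaluated at the chosen $\tilde{e}$). Income $y$ is not changed by the nudge, so differencing the two potential outcomes gives equation (4):

```latex
\begin{aligned}
V(\theta^n) &= \bigl(y - T - \tilde{e}(\theta^n)\,p_e\bigr) + f\bigl(\tilde{e}(\theta^n);\alpha\bigr) + M(\theta^n) + T \\
            &= y - \tilde{e}(\theta^n)\,p_e + f\bigl(\tilde{e}(\theta^n);\alpha\bigr) + M(\theta^n), \\
\Delta V    &= V(\theta^1) - V(\theta^0) = -\Delta\tilde{e}\cdot p_e + \Delta f + \Delta M.
\end{aligned}
```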

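Social welfare is consumer welfare plus profits minus the externality:

$$W(n) = \int \Bigl[ U(\theta^n) + (\pi_e - \phi_e)\,\tilde{e}(\theta^n) \Bigr]\, dF(\Theta). \tag{5}$$

The effect of the nudge on social welfare is

$$\Delta W = \int \Bigl[ \Delta V - C_n + (\pi_e - \phi_e)\,\Delta\tilde{e} \Bigr]\, dF(\Theta). \tag{6}$$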

The first term in Equation (6) reflects the net benefit to consumers, ignoring the fact that they must pay for the nudge through the lump-sum tax. The second term, $C_n$, then accounts for the cost of the nudge. The final term reflects the change in the pricing distortion.
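Since $F(\Theta)$ is the distribution of consumers, the integral in Equation (6) can be approximated by averaging over a sample of households. The following is a minimal sketch under that reading; the function name and every number (per-household $\Delta V$ and $\Delta\tilde{e}$, and the per-unit $p_e$, $C_n$, $\pi_e$, $\phi_e$) are hypothetical, not estimates from this paper. It also computes a simplified variant that replaces $\Delta V$ with retail energy cost savings $-\Delta\tilde{e}\cdot p_e$, which is what dropping $\Delta f + \Delta M$ from Equation (4) amounts to.

```python
from statistics import fmean
from typing import Sequence


def delta_w(delta_v: Sequence[float], delta_e: Sequence[float],
            cost_n: float, markup: float, externality: float) -> float:
    """Sample analogue of Equation (6): average of dV - C_n + (pi_e - phi_e) * de."""
    if len(delta_v) != len(delta_e) or len(delta_v) == 0:
        raise ValueError("need equal-length, non-empty samples")
    return fmean(dv - cost_n + (markup - externality) * de
                 for dv, de in zip(delta_v, delta_e))


# Hypothetical inputs: dollars per household and energy units (e.g. therms).
p_e, cost_n, markup, externality = 1.0, 1.5, 0.25, 0.75
delta_v = [3.0, -1.0, 2.0, 0.0]     # consumer welfare change (e.g. measured by WTP)
delta_e = [-10.0, 0.0, -5.0, -3.0]  # change in energy use

welfare = delta_w(delta_v, delta_e, cost_n, markup, externality)  # 1.75

# Simplified "energy savings only" variant: use -de * p_e in place of dV,
# i.e. ignore the non-energy terms (df + dM) in Equation (4).
energy_only = [-de * p_e for de in delta_e]
welfare_energy_only = delta_w(energy_only, delta_e, cost_n, markup, externality)  # 5.25
```

With these made-up inputs the energy-savings-only variant is three times the full calculation; the factor of five reported in the introduction comes from the paper's own estimates, not from this toy.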

Nudges with the same effect on behavior $\Delta\tilde{e}$ and the same cost $C_n$ – and thus the same cost effectiveness – can have very different effects on consumer welfare, and thus very different social welfare effects. Figure 1 illustrates several distinct mechanisms through which demand could shift from $D_0$ to $D_1$, giving the same $\Delta\tilde{e} < 0$ as the equilibrium shifts from point a to point g.

First, imagine that there is no moral utility, and the nudge only sets $\hat{f} = f$, i.e. it only provides information or eliminates bias. In this example, $D_0$ represents perceived $\hat{f}$, while $D_1$ represents true $f$. The nudge saves consumers money $-\Delta\tilde{e}\cdot p_e$ (rectangle acdg), which is only partially offset by the reduction in consumption utility $f(e;\alpha)$ (trapezoid bcdg). To a first-order approximation, the nudge generates $\Delta V \approx -\frac{1}{2}\frac{(\Delta\tilde{e})^2}{de/dp_e} > 0$, i.e. it eliminates deadweight loss triangle abg.
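The triangle formula can be checked directly. If demand is approximately linear between the two equilibria, triangle abg has base $|\Delta\tilde{e}|$ and height equal to the price change that would move consumption by the same amount along the demand curve, $|\Delta\tilde{e}|/|de/dp_e|$. Since $de/dp_e < 0$:

```latex
\text{area}(abg)
  = \frac{1}{2}\,|\Delta\tilde{e}|\cdot\frac{|\Delta\tilde{e}|}{|de/dp_e|}
  = \frac{1}{2}\,\frac{(\Delta\tilde{e})^2}{|de/dp_e|}
  = -\frac{1}{2}\,\frac{(\Delta\tilde{e})^2}{de/dp_e} > 0 .
```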

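Figure 1: Illustrating the Effects of a Nudge on Consumer Welfare

[Figure: price ($) plotted against energy use $e$, showing demand curves $D_0$ and $D_1$, the price line $p_e$, and the labeled points (a, b, c, d, g, h, i, j, k, l, and $m_s$) referenced in the text.]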

Now imagine that $\hat{f} = f$ without the nudge, and the nudge only raises the moral price from $\mu_0 = 0$ to $\mu_1$, generating the same $\Delta\tilde{e}$. In this example, $D_0$ reflects consumption utility $f(e;\alpha)$, both with and without the nudge. As in the first example, this saves consumers money $-\Delta\tilde{e}\cdot p_e$, but this is outweighed by the consumption utility loss shown by trapezoid acdh. In addition, moral utility $M$ decreases by $\mu_1\tilde{e}(\theta^1)$, which is area ghji. In sum, the moral tax reduces consumer welfare by the same amount as a standard tax: $\Delta V = \frac{1}{2}\frac{(\Delta\tilde{e})^2}{de/dp_e} - \mu_1\tilde{e}(\theta^1) < 0$, or trapezoid agij. Unlike a standard tax, however, the moral tax does not generate revenues – it simply reduces utility. The welfare effect is negative even if the first-best $\tilde{e}$ is achieved. Alternatively, the nudge could be a moral subsidy on every unit of $e$ not consumed up to $m_s$. In this case, consumer welfare would change by $\Delta V = \frac{1}{2}\frac{(\Delta\tilde{e})^2}{de/dp_e} + \mu_1\bigl(m_s - \tilde{e}(\theta^1)\bigr) > 0$, or trapezoid aklg. More broadly, the nudge can have unbounded positive or negative effects on $\Delta V$ unless further restrictions are placed on $m$.
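The three cases can be compared numerically. This sketch uses hypothetical parameters and assumes linear demand, so that (by the first-order condition (3)) a moral price $\mu_1$ moves consumption by $\mu_1 \cdot de/dp_e$, just as a price increase of $\mu_1$ would. It then evaluates the three expressions for $\Delta V$ given above at the same $\Delta\tilde{e}$.

```python
def dv_information(d_e: float, de_dp: float) -> float:
    """Case 1: the nudge only corrects perceived utility (f_hat -> f).
    The gain is the deadweight-loss triangle, -0.5 * d_e**2 / (de/dp_e) > 0."""
    return -0.5 * d_e ** 2 / de_dp


def dv_moral_tax(d_e: float, de_dp: float, mu1: float, e1: float) -> float:
    """Case 2: the nudge raises the moral price from 0 to mu1."""
    return 0.5 * d_e ** 2 / de_dp - mu1 * e1


def dv_moral_subsidy(d_e: float, de_dp: float, mu1: float, e1: float, m_s: float) -> float:
    """Case 3: utility mu1 for each unit of e not consumed, up to m_s."""
    return 0.5 * d_e ** 2 / de_dp + mu1 * (m_s - e1)


# Hypothetical linear demand with de/dp_e = -20: a moral price of 0.5 lowers use
# by 10 units, from 100 to 90. All three nudges share this same change d_e.
de_dp, mu1, e0 = -20.0, 0.5, 100.0
d_e = de_dp * mu1  # -10.0
e1 = e0 + d_e      # 90.0
m_s = 120.0        # must exceed e

print(dv_information(d_e, de_dp))                  # 2.5
print(dv_moral_tax(d_e, de_dp, mu1, e1))           # -47.5
print(dv_moral_subsidy(d_e, de_dp, mu1, e1, m_s))  # 12.5
```

The same behavior change produces three very different welfare effects, which is the sense in which $\Delta\tilde{e}$ alone cannot rank nudges.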

This discussion highlights how traditional evaluation metrics can be misleading guides for policy decisions: large behavior change $\Delta\tilde{e}$ and low implementation cost $C_n$ are neither necessary nor sufficient for a nudge to increase welfare.

I.D Estimation

In the remainder of the paper, we estimate Equation (6) for a specific nudge: Home Energy Reports. We estimate the change in energy use $\Delta\tilde{e}$ by implementing HERs as a randomized controlled trial. We use outside estimates of energy use externalities $\phi_e$, and we estimate markup $\pi_e$ and nudge cost $C_n$ from pricing and cost data. To estimate the change in consumer welfare $\Delta V$, we elicit willingness-to-pay for the nudge. In doing this, we must assume that our experimental design correctly elicits WTP and that consumers are “sophisticated” in the sense that their WTP for the nudge equals its true effect on their welfare. Sections II-IV present evidence on the plausibility of this assumption, and we formalize it before performing the welfare analysis in Section V.
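Random assignment is what makes $\Delta\tilde{e}$ straightforward to estimate: the average effect of HERs on energy use can be estimated by the difference in mean usage between treatment and control households. The sketch below shows that textbook estimator with an unequal-variance standard error; it is an illustrative assumption, not necessarily the specification used in the paper, and the usage numbers are invented.

```python
from math import sqrt
from statistics import fmean, variance
from typing import Sequence, Tuple


def diff_in_means(treated: Sequence[float], control: Sequence[float]) -> Tuple[float, float]:
    """Difference in mean outcomes (treated minus control) and its standard error."""
    if len(treated) < 2 or len(control) < 2:
        raise ValueError("need at least two observations per group")
    effect = fmean(treated) - fmean(control)
    # Unequal-variance standard error: sqrt(s_t^2 / n_t + s_c^2 / n_c).
    se = sqrt(variance(treated) / len(treated) + variance(control) / len(control))
    return effect, se


# Hypothetical winter natural gas use (therms) for a handful of households.
treated = [95.0, 100.0, 105.0]
control = [100.0, 105.0, 110.0]
effect, se = diff_in_means(treated, control)  # effect = -5.0
```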

II Experimental Design

The Opower Home Energy Report is a one-page letter (front and back) with two key features illustrated in Figure 2. The Social Comparison Module in Panel (a) compares a household’s energy use to that of its 100 geographically nearest neighbors in similar house sizes whose energy use meters were read on approximately the same date. In the neighbor comparison graphs, “All Neighbors” refers to the mean of the neighbor distribution, while “Efficient Neighbors” refers to the 20th percentile. To the right of the three-bar neighbor comparison graph is a box presenting “injunctive norms” intended to signal virtuous behavior (Schultz et al. 2007): consumers earn one smiley face for using less than their mean neighbor and two smiley faces for using less than their Efficient Neighbors. The Action Steps Module in Panel (b) gives energy conservation tips; these suggestions are tailored to each household based on past usage patterns. The HERs are thus designed to both provide information and activate “moral utility.”
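The comparison and smiley-face logic described above can be sketched as follows. This is a hypothetical reconstruction, not Opower's code: the function name is ours, the percentile convention (Python's `statistics.quantiles` with `method="inclusive"`) is an assumption, and the toy neighbor group has five homes rather than about 100.

```python
from statistics import fmean, quantiles
from typing import Dict, Sequence


def neighbor_comparison(own_use: float, neighbor_use: Sequence[float]) -> Dict[str, float]:
    """Benchmarks and smiley-face rating for one household's report."""
    if len(neighbor_use) < 2:
        raise ValueError("need at least two neighbors")
    all_neighbors = fmean(neighbor_use)  # "All Neighbors": mean of the group
    # "Efficient Neighbors": 20th percentile (first of the four quintile cut points).
    efficient = quantiles(neighbor_use, n=5, method="inclusive")[0]
    if own_use < efficient:
        smileys = 2  # used less than Efficient Neighbors
    elif own_use < all_neighbors:
        smileys = 1  # used less than the mean neighbor
    else:
        smileys = 0
    return {"all_neighbors": all_neighbors, "efficient_neighbors": efficient, "smileys": smileys}


neighbors = [10.0, 20.0, 30.0, 40.0, 50.0]  # hypothetical usage of comparison homes
print(neighbor_comparison(25.0, neighbors))
# {'all_neighbors': 30.0, 'efficient_neighbors': 18.0, 'smileys': 1}
```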

Figure 2: The Opower Home Energy Report

[Figure: sample report. (a) Social Comparison Module: last-month and last-12-months comparisons of the household’s natural gas use (therms) with “All Neighbors” and “Efficient Neighbors.” (b) Action Steps Module: personalized energy-saving tips.]

Notes: The Home Energy Report is a one-page (front and back) letter including the Social Comparison Module in Panel (a) and the Action Steps Module in Panel (b).

Opower has implemented HER programs at 95 utilities in nine countries. We focus on one program at Central Hudson Gas and Electric, which serves 300,000 electric customers and 78,000 natural gas customers in eight New York counties. Like 23 other states, New York has an Energy Efficiency Portfolio Standard, which requires that utilities cause consumers to reduce energy demand by a specified amount each year (ACEEE 2015). As part of compliance with the standard, Central Hudson had already planned a multi-year Home Energy Report program for residential natural gas customers. Central Hudson and Opower agreed to modify the program to incorporate this study.

Why do utilities typically send many HERs over multiple years instead of stopping after the first HER or first year? The reason is that in practice, continued HERs cause incremental conservation (Allcott and Rogers 2014). The continuing effects likely arise both because additional HERs are a motivational reminder and because they provide new information. Indeed, 49.5 percent of households saw their ranking relative to their mean neighbor or Efficient Neighbors change across reports in the first year of the Central Hudson program we study.[7] Furthermore, the energy conservation tips change with every report. It is thus unlikely that the first HER provides the bulk of the informational or motivational benefits, and it is not obvious to what extent consumers would value the first HERs differently from later HERs.

Figure 3 summarizes the experimental design. Starting with an eligible population of 19,927

households, Opower randomly assigned half to treatment and half to control. The HER treatment

group received up to four HERs during the “heating season” from late October 2014 through late

April 2015. Central Hudson employees read each household’s natural gas meter every two months,

and an HER was generated and mailed shortly after each meter read in order to provide timely and

relevant information. Like almost all other HER programs, this is an “opt-out” program, so households continue to receive HERs unless they contact the utility to opt out. Of the recipient group

households, 525 were not sent any HERs for standard technical reasons such as not having enough

neighbors to generate valid comparisons; we did not survey these households. Some households

received fewer than four HERs for the same technical reasons.

Opower included a one-page survey and postage-paid Business Reply Mail return envelope in

the same envelope as the final HER of the 2014-2015 heating season. Figure 4 reproduces the

survey. The first seven questions were a multiple price list (MPL) that asked recipients to trade off

four more HERs against checks for different amounts of money. The responses can be used to bound

willingness-to-pay. For example, consumers who prefer “four more Home Energy Reports plus a $9

check” instead of “a $10 check” value the four HERs at $1 or more. Consumers who prefer “a $10

check” instead of “four more Home Energy Reports plus a $5 check” value the four HERs at $5 or

less. A consumer who answered as in these two examples therefore has WTP between $1 and $5.

7

These changes occur largely because of standard month-to-month variation in household energy use, not due

to conservation actions induced by the HERs. The average treatment effect of HERs is very small relative to the

standard within-household and between-household variation.


Figure 3: Experimental Design

[Diagram: 19,927 households are randomized 1/2 to Control and 1/2 to Report Recipient; Report Recipients are randomized 1/3 to the Base group (first mail survey only) and 2/3 to the Follow-up group. Process for Report Recipients: 1. Four Reports (October 2014-April 2015); 2. First mail survey (in final Report); 3. Follow-up mail survey (own envelope, May; Follow-up group only); 4. Phone survey (June-August); 5. Next four Reports or check (later 2015).]

The survey letters included three variations intended to remind consumers of different features

of the HERs. Figure 4 was the “Standard” version. In the “Comparison” version, the sentence

“Remember that Home Energy Reports compare your energy use to your neighbors’ use”

was added after “we want to know what you think about them” in the introductory paragraph.

In the “Environmental” version, “Remember that Home Energy Reports help you to reduce

your environmental impact” was added in that same place.

In a typical Opower HER program, around one percent of consumers dislike HERs enough to

take the time to opt out. If time has any positive value, this implies a negative WTP for HERs.

To correctly measure the distribution of WTP in such an opt-out program, it is thus necessary to

allow consumers to reveal negative WTP. We designed the MPL to do this, by asking consumers

to choose between “four more HERs plus a $10 check” and checks of less than $10. For example,

consumers who choose “a check for $9” instead of “four more HERs plus a $10 check” are giving up

$1 to not receive four more HERs, meaning that their WTP must be no greater than $-1. Answers

to the seven-question MPL place a respondent’s WTP into eight ranges, which are symmetric about

zero: (−∞, −9], [−9, −5], [−5, −1], [−1, 0], [0, 1], [1, 5], [5, 9], and [9, ∞).
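The bounding logic can be made concrete with a short Python sketch. It is not the authors’ code: the names `MPL_OPTIONS`, `THRESHOLDS`, and `wtp_interval` are hypothetical, and the question order (question 1 pairs a $1 check against four HERs plus a $10 check; question 7 pairs a $10 check against four HERs plus a $1 check) is the one implied by the survey in Figure 4 and the phone-survey routing in footnote 8. Choosing the HER bundle on a question reveals WTP of at least the cash-only check minus the bundle’s check; choosing the cash reveals WTP of at most that amount.

```python
import math
from typing import List, Optional, Sequence, Tuple

# (cash-only check, check paired with four more HERs) for mail questions 1-7.
MPL_OPTIONS: List[Tuple[int, int]] = [
    (1, 10), (5, 10), (9, 10), (10, 10), (10, 9), (10, 5), (10, 1),
]
# Choosing the HER bundle on a question reveals WTP >= cash - bundle check;
# choosing the cash-only check reveals WTP <= cash - bundle check.
THRESHOLDS = [cash - bundle for cash, bundle in MPL_OPTIONS]  # -9, -5, -1, 0, 1, 5, 9


def wtp_interval(chose_hers: Sequence[Optional[bool]]) -> Optional[Tuple[float, float]]:
    """Return (lower, upper) WTP bounds, or None if incomplete or inconsistent.

    chose_hers[q] is True if the respondent picked the HER bundle on question q+1,
    False if they picked the cash-only check, and None if the question was skipped.
    """
    n_questions = len(THRESHOLDS)
    if len(chose_hers) != n_questions or any(a is None for a in chose_hers):
        return None  # incomplete
    n_hers = sum(1 for a in chose_hers if a)
    # A consistent respondent takes the bundle on the first n_hers questions only.
    if list(chose_hers) != [True] * n_hers + [False] * (n_questions - n_hers):
        return None  # internally inconsistent
    lower = THRESHOLDS[n_hers - 1] if n_hers > 0 else -math.inf
    upper = THRESHOLDS[n_hers] if n_hers < n_questions else math.inf
    return lower, upper


print(wtp_interval([True, True, True, True, True, False, False]))  # (1, 5)
print(wtp_interval([True, False, True, False, False, False, False]))  # None
```

The first call reproduces the $1 to $5 example given earlier in this section; answering all seven questions with the HER bundle yields the open range [9, ∞).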

The survey’s final question was, “Think back to when you received your first Home Energy

Report. Did you find that you used more or less energy than you thought?” This measures the

extent to which HERs caused consumers to update beliefs about relative usage.


Figure 4: Mail Survey

Tell us what you think, and earn a check for up to $10!

Central Hudson has been sending you Home Energy Reports since last fall, and we want to know what you think about them. Would you take a moment to complete the survey below? For each question, please fill in one box with your answer.

What happens next?

1. When you’re finished, mail the survey back to us in the enclosed prepaid envelope.

2. We will use a lottery to draw one of the first seven questions, and we’ll mail you what you chose in that question: either a check or a check plus four more Home Energy Reports.

Thank you! Your participation will help us make these reports even more useful for you. If you have any questions, please email us at HERSurvey@cenhud.com or call (845) 486-5221.

1. Which would you prefer? A $1 check OR 4 more Home Energy Reports PLUS a $10 check

2. Which would you prefer? A $5 check OR 4 more Home Energy Reports PLUS a $10 check

3. Which would you prefer? A $9 check OR 4 more Home Energy Reports PLUS a $10 check

4. Which would you prefer? A $10 check OR 4 more Home Energy Reports PLUS a $10 check

5. Which would you prefer? A $10 check OR 4 more Home Energy Reports PLUS a $9 check

6. Which would you prefer? A $10 check OR 4 more Home Energy Reports PLUS a $5 check

7. Which would you prefer? A $10 check OR 4 more Home Energy Reports PLUS a $1 check

8. Think back to when you received your first Home Energy Report. Did you find that you used more or less energy than you thought? (Much less / Somewhat less / About what I thought / Somewhat more / Much more)

Account Number: xxxx-xxxx-xx-x

A randomly selected 2/3 of HER recipients were sent a follow-up mail survey on May 26th,

2015. This was not part of an HER and was sent through a separate vendor, so the outbound

envelope had a different originating address than the HERs. The survey and Business Reply Mail

return envelope were identical to the first mail survey. The “base group” was not sent the follow-up

mail survey.

In June, July, and early August 2015, an independent survey research firm surveyed the entire

HER treatment group by phone. Each phone number was called up to eight times until the

household completed the survey or declined to participate. The beginning of the phone survey

parallels the mail survey, except that we used a three-question version of the same MPL that

dynamically eliminated questions whose answers were implied by earlier answers.⁸ We then asked

a belief update question parallel to the mail survey and a series of additional questions to elicit

beliefs about energy cost savings and qualitative evaluations of the HERs. Appendix A presents

the full phone survey questionnaire. A condensed version is:

1. [Multiple price list]

2. Did your first Report say you were using more or less than you thought?

3. Do you think that receiving four more Reports this fall and winter would help you reduce

your natural gas use by even a small amount?

(a) If Yes: How much money do you think you would save on your natural gas bills if you

receive four more Reports?

4. How much money do you think the average household has saved since last fall?

5. How would you like the Reports if they didn’t have the neighbor comparison graph?

6. Do the Reports make you feel inspired, pressured, neither, or both?

7. Do the Reports make you feel proud, guilty, neither, or both?

8. Do you agree/disagree with: “The Reports gave useful information that helped me conserve

energy.”

9. Do you have any other comments about the Reports that you’d like to share?

8

We began by asking question 4 from the mail survey. If the respondent preferred HERs+a $10 check, we asked

question 6. If the respondent preferred HERs+a $5 check on question 6, we asked question 7, whereas if the respondent

preferred a $10 check on question 6, we asked question 5. If the respondent preferred a $10 check on question 4, we

asked question 2. If the respondent preferred HERs+a $10 check on question 2, we asked question 3, whereas if the

respondent preferred a $5 check on question 2, we asked question 1.
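The routing in footnote 8 is a binary search over the same seven cutoffs, so three answers always pin down one of the eight WTP ranges. A minimal Python sketch, assuming a respondent who takes the HER bundle whenever WTP is at least the question’s cutoff (ties resolved toward HERs, which is our assumption); `NEXT_QUESTION` and `phone_mpl` are hypothetical names:

```python
import math
from typing import Callable, List, Tuple

THRESHOLDS = [-9, -5, -1, 0, 1, 5, 9]  # WTP cutoffs for mail questions 1-7

# Routing from footnote 8: (question, chose HER bundle?) -> next question.
# Questions 1, 3, 5, and 7 are terminal, so they have no entry.
NEXT_QUESTION = {
    (4, True): 6, (4, False): 2,
    (6, True): 7, (6, False): 5,
    (2, True): 3, (2, False): 1,
}


def phone_mpl(prefers_hers: Callable[[int], bool]) -> Tuple[List[int], Tuple[float, float]]:
    """Run the adaptive three-question MPL; return questions asked and WTP bounds."""
    lower, upper = -math.inf, math.inf
    question, asked = 4, []
    while question is not None:
        asked.append(question)
        cutoff = THRESHOLDS[question - 1]
        chose_hers = prefers_hers(question)
        if chose_hers:
            lower = max(lower, cutoff)  # bundle chosen: WTP >= cutoff
        else:
            upper = min(upper, cutoff)  # cash chosen: WTP <= cutoff
        question = NEXT_QUESTION.get((question, chose_hers))
    return asked, (lower, upper)


# Hypothetical respondent with WTP of $3.
asked, bounds = phone_mpl(lambda q: 3.0 >= THRESHOLDS[q - 1])
print(asked, bounds)  # [4, 6, 5] (1, 5)
```

Because no question is asked whose answer is already implied, a phone respondent cannot give an internally inconsistent set of answers, which is why Table 3 reports that category as N/A for the phone survey.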


If the phone survey respondent reported that he or she had already returned the mail survey,

the phone survey skipped directly to question 3. Questions 6 and 7 were designed to measure

whether the HERs tend to generate positive or negative affect, to provide suggestive evidence on

whether HERs affect “moral utility” or act as a psychological tax or subsidy. The words “inspired,”

“proud,” and “guilty” were drawn from the Positive and Negative Affect Schedule (Watson, Clark,

and Tellegen 1988), a standard measure in psychology. We added the word “pressured” because

we hypothesized that it might be relevant in this context.

Both the mail and phone MPLs clearly stated at the outset that they were incentive-compatible.

The mail survey stated, “We will use a lottery to draw one of the first seven questions, and we’ll mail

you what you chose in that question.” The phone survey script stated, “These are real questions:

Central Hudson will use a lottery to pick one question and will actually mail you what you chose.”

Once all survey responses were collected, one of the seven MPL questions was randomly selected for

each respondent, and the respondent received what he or she had chosen in that question: either

a check from Central Hudson or a check plus four more HERs in the 2015-2016 heating season.⁹

The surveys did not state the consequences of non-response. In reality, households that did not

respond to the survey did not receive a check, and they are scheduled to receive four more HERs

in the 2015-2016 heating season.

III Data

There are five data sources: the utility’s natural gas bill data, neighbor comparisons, customer

demographic data, mail surveys, and phone surveys.

Central Hudson reads customers’ natural gas meters on very regular bi-monthly cycles: 95

percent of billing period durations are between 55 and 70 days. Central Hudson measures natural

gas use in hundred cubic feet (ccf). As we discuss further in Section V, Central Hudson uses a

decreasing block tariff, and the average marginal retail price during the post-treatment period is

$0.98 per ccf. We observe gas use for each household in treatment or control for all meter read

dates between September 1, 2013, and May 22, 2015.

The key feature of the Social Comparison Module in Panel (a) of Figure 2 is a bar graph

comparing the household’s use on its previous bill to the mean and 20th percentile of the distribution

of neighbors’ use. We observe that mean and 20th percentile for all HERs, including HERs that

control group households would have received.

Table 1 presents demographic variable summary statistics. “Baseline use” is mean use per day across all meter read dates in the first 365 days of our sample, from September 2013 through August 2014. Hybrid auto share is the share (from 0-100) of vehicles registered in the census tract in 2013 that were hybrids. All other variables are from a demographic data vendor and are matched to the utility account holder. These variables are from a combination of public records, survey responses, online and offline purchases, and statistical predictions, and most are likely measured with error. Some households in the population could not be matched to demographic data, in which case we use mean imputation. Neither attenuation bias nor imputation affects our arguments because we do not use these variables to estimate unbiased covariances. Instead, we will use these covariates primarily for prediction.

9

Because Central Hudson needs to continue the program to satisfy regulatory requirements under the Energy Efficiency Portfolio Standard, we placed 98.6 percent probability on the first question, on which 94 percent of respondents chose HERs. The remaining six questions were each selected with 0.2 percent probability.

Table 1: Demographic Variable Summary Statistics

Variable Obs Mean SD Min Max

Baseline use (ccf/day) 19,898 2.09 1.64 0 16.0

Income ($000s) 15,557 94.4 81.9 10 450

Net worth ($000s) 15,557 195 288 -30 1500

House value ($000s) 19,927 215 192 0 2527

Education (years) 19,475 13.6 2.44 10 18

Male 16,811 0.51 0.50 0 1

Age 17,282 50.7 16.1 19 99

Retired 16,728 0.04 0.20 0 1

Married 15,406 0.59 0.49 0 1

Rent 17,561 0.30 0.46 0 1

Single family home 17,734 0.68 0.46 0 1

House age 14,885 59.7 40.2 0 115

Democrat 18,080 0.16 0.55 -1 1

Hybrid auto share 19,728 1.03 2.78 0 18.2

Green consumer 18,883 0.15 0.35 0 1

Wildlife donor 16,728 0.06 0.24 0 1

Profit score 19,784 0.00 1.00 -1.65 2.09

Buyer score 14,967 0.00 1.00 -2.03 1.47

Mail responder 17,734 0.47 0.46 0 1

Home improvement 16,728 0.13 0.33 0 1

Notes: This table summarizes the demographic variables. Baseline use is mean natural gas use (in hundred

cubic feet per day) between September 2013 and August 2014. Hybrid auto share is the Census tract average.

All other variables are from a demographic data provider.

These data may overestimate household income, but the population is relatively wealthy: according to Census data, the mean household is in a census block group with median household income of $64,000. Education is top-coded at 18 years for people with any graduate degree. Democrat takes value 1 for Democrats and -1 for Republicans. Green consumer is a binary measure of environmentalism based on income, age, and purchases of organic food, energy-efficient appliances, and environmentally responsible brands. Wildlife donor is an indicator for whether the consumer has contributed to animal or wildlife causes. These two variables could proxy for environmentalism and thus interest in energy conservation. Profit score and buyer score measure the consumer’s likelihood of paying debts and making purchases; we normalize both to mean 0, standard deviation 1.

Mail responder is an indicator for whether anyone in the household has purchased by direct mail.

Home improvement is an indicator for home improvement transactions or product registrations,

which could proxy for interest in making energy-saving improvements in response to HERs.

Our household covariates X are these same variables, except that we take natural logs of

income, net worth, house value, age, and house age.¹⁰ Appendix Tables A1 and A2 confirm that

these covariates are not more correlated with HER recipient group or survey group assignment than

would be expected by chance.
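The shift applied to net worth before taking logs (footnote 10) can be sketched in a few lines of Python. The function name `log_with_shift` and the example values are hypothetical; values are in dollars, so the smallest observation is moved to $1 and therefore maps to log(1) = 0. The sketch always shifts, so it is meant for a variable like net worth whose minimum is below $1.

```python
import math
from typing import List


def log_with_shift(values: List[float]) -> List[float]:
    """Add a constant so the minimum equals 1, then take natural logs."""
    shift = 1.0 - min(values)  # e.g., a minimum of -30,000 gives a shift of 30,001
    return [math.log(v + shift) for v in values]


# Hypothetical net worth values in dollars, including a negative one.
net_worth = [-30_000.0, 0.0, 195_000.0]
print([round(x, 3) for x in log_with_shift(net_worth)])
```

The transformation preserves the ordering of households while keeping every observation inside the domain of the logarithm.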

Table 2 summarizes response rates. Households that were sent the follow-up mail survey were

more than twice as likely to respond as base group households, who only received the survey in

their final Home Energy Report. Overall, 899 households (9.5 percent of households that were surveyed) responded to the mail survey, and 1,690 households (17.9 percent) completed the phone survey. In total, 2,312 households (24.5 percent) responded to one or both surveys.

Table 2: Survey Response Rates

Response rate (%)

Mail survey 9.5

Base mail survey group 4.5

Follow-up mail survey group 12.0

Phone survey 17.9

Both mail and phone surveys 2.9

Mail and/or phone surveys 24.5
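The rows of Table 2 fit together arithmetically, which a quick Python check illustrates. It assumes the surveyed recipients split exactly 1/3 base group and 2/3 follow-up group, as in the randomization; after excluding households that were sent no HERs, the actual split may differ slightly.

```python
# Response rates (%) copied from Table 2.
base_rate, followup_rate = 4.5, 12.0
mail, phone, either = 9.5, 17.9, 24.5

# Pooled mail rate, assuming a 1/3 base group and 2/3 follow-up group split.
pooled_mail = (1 / 3) * base_rate + (2 / 3) * followup_rate

# Inclusion-exclusion: P(mail and phone) = P(mail) + P(phone) - P(mail or phone).
both = mail + phone - either

print(f"pooled mail rate: {pooled_mail:.1f}%")  # pooled mail rate: 9.5%
print(f"both surveys:     {both:.1f}%")         # both surveys:     2.9%
```

The inclusion-exclusion result matches the 2.9 percent “Both mail and phone surveys” row.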

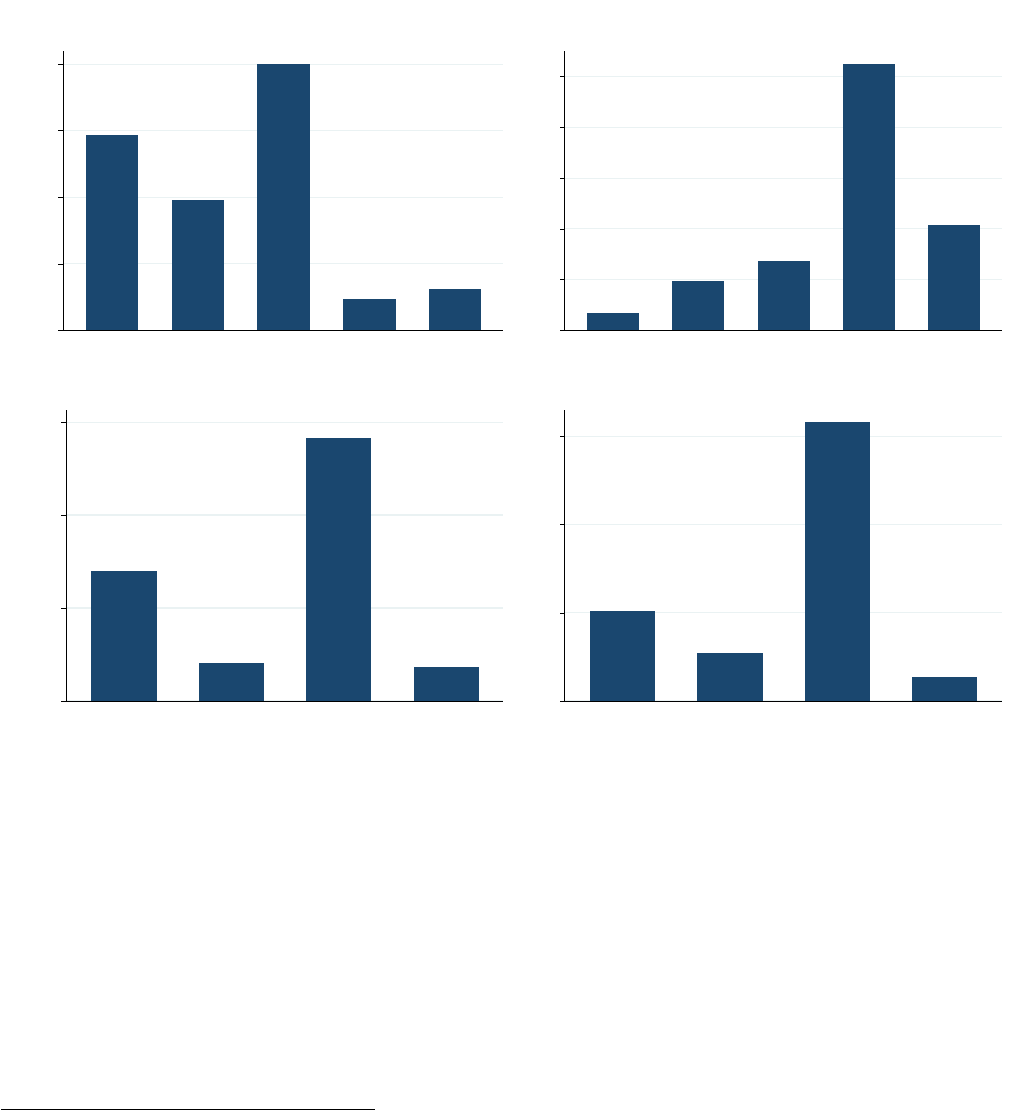

Figure 5 summarizes responses to the qualitative evaluations of the HERs from the phone survey.

Forty-nine percent would like HERs less if the neighbor comparisons were removed, against only 11

percent who would like them more. Seventy-three percent of respondents agree or strongly agree

that HERs provide useful information. For most respondents, the HERs did not generate positive or

negative affect: 57 percent said that the HERs made them feel neither “inspired” nor “pressured,”

and 63 percent said that HERs made them feel neither “proud” nor “guilty.” When the HERs did

induce some positive or negative affect, it was much more likely to be positive (inspired or proud)

instead of negative (pressured or guilty). These qualitative results suggest that most people “like”

HERs, i.e., that they would want HERs if they were free.

10

Some households have negative net worth, so before taking the natural log, we add a constant to all observations

such that the minimum value is $1.


Figure 5: Qualitative Evaluations of Home Energy Reports

[Four bar charts, each plotting the percent of respondents: “How would you like Reports without neighbor comparisons?” (Much less, Somewhat less, About the same, Somewhat more, Much more); “The Reports gave useful information” (Strongly disagree, Disagree, Neither, Agree, Strongly agree); “Do the Reports make you feel ...” (Inspired, Pressured, Neither, Both); “Do the Reports make you feel ...” (Proud, Guilty, Neither, Both).]

Notes: This figure presents qualitative evaluations of Home Energy Reports from the phone survey.

III.A Constructing Willingness-to-Pay

Complete and internally consistent responses to the multiple price list allow us to place each respondent’s willingness-to-pay into one of eight ranges. For simplicity, we assign one unique WTP to each range. For the six interior ranges, we assign the mean of the endpoints. For example, we assign a WTP of $-3 for all responses on [−5, −1] and a WTP of $0.50 for all responses on [0, 1]. For the unbounded ranges, i.e., WTP less than $-9 or greater than $9, we assume that the conditional distribution of WTP is triangular, with initial density equal to the average density on the adjacent range.¹¹ This gives $14.48 and $-12.36, respectively, as the conditional mean WTPs on [9, ∞) and (−∞, −9]. We also present results under alternative assumptions.

11

For example, the density on [5, 9] is 2.49 percent of respondents per dollar, and the mass above $9 is 20.43 percent of respondents. We assume that this 20.43 percent of respondents is distributed triangular on [9, ∞), with maximum density of 2.49 percent per dollar at $9 decreasing to zero density above some upper bound. This gives an upper

For the 2.9 percent of households that responded to both the phone and mail surveys, we use

the phone survey WTP in order to be consistent with the phone survey’s additional qualitative

questions. This decision does not significantly affect our welfare estimates, because the mean WTP

for these households was almost identical on the mail and phone MPLs. 87 households returned

more than one mail survey with valid WTP; in these cases we use the first survey we received.

Ten households opted out during the program’s first year. These households will not receive

HERs in the program’s second year, so we will exclude them from the mean WTP estimation in

Table 6 and from the welfare analyses in Sections V-VI.
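The WTP assignment in this subsection, including the triangular tails of footnote 11, can be sketched in Python. The function and variable names are hypothetical. A tail’s area equals its probability mass, so its width is 2 × mass / density, and the mean of a right triangle lies one third of the way from its tall side. With the rounded inputs reported in footnote 11, the sketch gives about $25.41 and $14.47 for the upper tail and about $-12.37 for the lower tail, close to the reported $25.43, $14.48, and $-12.36; the small gaps are consistent with rounding of the published densities.

```python
from typing import Dict, List, Tuple

# Bounded WTP ranges from the seven-question MPL (dollars).
INTERIOR_RANGES: List[Tuple[int, int]] = [(-9, -5), (-5, -1), (-1, 0), (0, 1), (1, 5), (5, 9)]


def interior_wtp(ranges: List[Tuple[int, int]] = INTERIOR_RANGES) -> Dict[Tuple[int, int], float]:
    """Assign each bounded range the mean of its endpoints."""
    return {(lo, hi): (lo + hi) / 2 for lo, hi in ranges}


def triangular_tail(edge: float, density: float, mass: float,
                    upper: bool = True) -> Tuple[float, float]:
    """Return (far endpoint, conditional mean) of a triangular WTP tail.

    The density equals `density` (share of respondents per dollar, taken from
    the adjacent interior range) at `edge` and falls linearly to zero.
    """
    width = 2.0 * mass / density            # area = density * width / 2 = mass
    direction = 1.0 if upper else -1.0
    far_end = edge + direction * width
    mean = edge + direction * width / 3.0   # centroid of a right triangle
    return far_end, mean


# Inputs from footnote 11, with percentages expressed as fractions.
upper_end, upper_mean = triangular_tail(9.0, 0.0249, 0.2043)
lower_end, lower_mean = triangular_tail(-9.0, 0.0125, 0.0631, upper=False)
print(interior_wtp()[(-5, -1)], interior_wtp()[(0, 1)])         # -3.0 0.5
print(round(upper_end, 2), round(upper_mean, 2), round(lower_mean, 2))  # 25.41 14.47 -12.37
```

The “alternative assumptions” mentioned above would amount to swapping `triangular_tail` for a different rule for the two unbounded ranges.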

III.B Do the Surveys Correctly Measure Willingness-to-Pay?

While standard in academic economics and lab settings, multiple price list surveys are relatively

unusual in field settings. One concern in designing this study was that respondents would not

understand the MPL, rendering WTP estimates noisy or meaningless. We devoted substantial

effort to designing easily-understandable surveys and piloting the mail and phone instruments.

Table 3 shows that the vast majority of returned mail surveys were filled out in a way that allows

us to construct a valid WTP. 14.7 percent of mail surveys were incomplete, usually because the

respondent answered only one of the seven questions. 11.1 percent of phone respondents heard the

introduction to the MPL but terminated the interview before completing all three questions. Only

2.1 percent of mail MPL responses were complete and internally inconsistent. Three mail MPL

responses (0.3 percent) were both incomplete and internally inconsistent. Because the phone MPL

was shortened by not asking questions whose answers were implied by previous responses, there

was no opportunity to be internally inconsistent on the phone survey. These figures suggest that

consumers generally understood the MPL and gave meaningful answers.¹²

Table 3: Multiple Price List Response Statistics

Mail Phone

Percent incomplete 14.7 11.1

Percent complete and internally inconsistent 2.1 N/A

Percent complete and internally consistent 83.2 88.9

bound of $25.43. The mean WTP on [9, ∞) is thus $14.48. The mean WTP on (−∞, −9] is determined by an analogous calculation, given that the density on [−9, −5] is 1.25 percent per dollar and the mass below $-9 is 6.31 percent.

12

We listened to about 25 early phone survey interviews. Because the MPL questions are unusual, respondents

would sometimes pause to process the first question but would then provide a considered answer to that and the next

two MPL questions.


WTP is very strongly correlated with the qualitative assessments of the HERs from questions 3-9 of the phone survey. As would be expected, WTP is strongly positively correlated with reporting

that future HERs would save them more money (question 3), feeling inspired and proud (questions

6 and 7), agreeing that HERs give useful information (question 8), and with positive additional

comments about the HERs (question 9).¹³ Also as expected, WTP is strongly negatively correlated

with preferring that HERs not have neighbor comparisons (question 5) and with feeling pressured

(question 6). The only result that we did not expect was that feeling guilty is positively associated

with WTP, but the relationship is not significant after conditioning on predicted savings, which

suggests that consumers do not like guilt per se – they like guilt only because it helps them reduce

expenditures. See Appendix Table A4 for formal results.

As we detail below, 35 percent of respondents reported negative WTP. In Appendix Table A5,

we confirm that negative WTP is strongly associated with the same set of qualitative assessments in

expected ways. Furthermore, all six households that opted out and also responded to the survey had

negative WTP. These strong correlations build confidence that both the MPL and the qualitative

questions elicited meaningful responses.

87 households returned more than one mail survey with valid WTP. These could have been filled

out by different people in the same household, or by one person who wanted to ensure that his or

her response was received. Thus, one might expect responses to be correlated, but not perfectly

correlated. WTP is indeed very highly correlated across the two responses within these households,

implying that people understood the mail MPL enough that responses were consistent within a

person or household. See Appendix Table A6 for formal results.

277 households responded to both the phone and mail surveys, of which 224 have valid WTP from both surveys and 259 responded to the belief update question on both surveys. Because the phone survey called for skipping these questions if the respondent reported already returning the mail survey, it seems likely that duplicate mail and phone responses came from different people in the same household. Here again, one might thus expect responses to be correlated, but not perfectly correlated. Appendix Table A6 confirms this: WTP, an indicator for negative WTP, and belief updates are all strongly correlated within household across the mail and phone surveys. WTP and answers to the belief update question within household are almost equally strongly correlated across the mail and phone surveys, which suggests that the MPL questions to elicit WTP were no more confusing or cognitively demanding than the belief update question, where responses were on the familiar Likert scale. Across the 224 households with valid WTP from both surveys, the mean WTP and the share of negative WTPs are nearly identical between the mail and phone surveys. This implies that neither survey format generated an idiosyncratic bias in mean WTP.

13

456 phone survey respondents offered comments in response to question 9. Of these, 170 were positive, such as “They’re terrific. I like the way they’re laid out and easy to understand,” and “I think you did it right. It has all the information owners need. I think it’s an excellent idea,” and “Detailed and a great thing. Helps me monitor my usage.” 213 were neutral, often including complaints about high energy prices. 73 were negative, such as “I do not understand it; it does not make sense,” and “It’s a waste of paper. If they did not send those reports maybe they could lower the delivery charges,” and “The money would be better spent reducing the cost of energy rather than sending the reports.”

In general, these results suggest that respondents understood the MPLs and that the survey

instruments correctly elicited WTP. Here we address some remaining reasons why that might not

be the case.

First, time discounting could affect WTP. For example, if respondents have annual discount

rates of six percent and thought that checks would arrive six months before the HERs’ benefits,

their WTP would be about three percent lower than if they thought that checks would arrive at the

same time as the benefits. Such a small difference would not be enough to meaningfully affect the

welfare calculations below. Conceptually, we want all components of welfare to be discounted to

the time at which the implementation costs are incurred for the second year of HERs. In practice,

the checks will arrive in late 2015, although we intentionally did not say this on the survey because

we did not want to make time discounting salient.
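The three-percent figure follows from a one-line discounting calculation, sketched here in Python under the illustrative assumptions stated above (a six percent annual rate and a six-month gap), plus annual compounding, which is our assumption:

```python
annual_rate = 0.06   # illustrative annual discount rate from the text
delay_years = 0.5    # benefits arrive six months after the check

# Present value of $1 of HER benefits received `delay_years` after the check,
# assuming annual compounding.
discount_factor = 1.0 / (1.0 + annual_rate) ** delay_years
pct_lower = 100.0 * (1.0 - discount_factor)
print(f"WTP lower by about {pct_lower:.1f} percent")  # WTP lower by about 2.9 percent
```

Under continuous compounding at the same rate the answer is essentially unchanged, so the conclusion does not hinge on that choice.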

Second, WTP might be lower if paying out of pocket instead of trading off against an unexpected

windfall from a check. If this results from a behavioral bias, it is not obvious what WTP to respect

for welfare analysis.

Third, WTP might be higher with per-month subscription pricing instead of a one-time check.

Because the monetary amounts are small and respondents pay for HERs from a future windfall

instead of from their existing funds, it is unlikely that credit constraints could explain a preference

for subscription payments. If WTP differs with subscription pricing vs. a one-time check due to a

behavioral bias such as focusing bias (Koszegi and Szeidl 2013), it is not clear that the subscription

pricing WTP would be the one to respect for welfare analysis.

Fourth, Beauchamp et al. (2015) demonstrate a compromise effect in multiple price lists – that

is, that people tend to favor the middle option of an MPL. Because our phone MPL questions

were given sequentially, however, this concern does not apply to our phone MPL. Furthermore,

for the households that responded to both phone and mail MPLs, the mean WTPs from the

two instruments are indistinguishable. This suggests that the mail MPL is also unaffected by a

compromise effect.

Models of contextual inference such as Kamenica (2008) suggest two reasons why our mail MPL

would not be biased by a compromise effect. First, there is little imperfect information: the MPL

asks simple questions about a familiar good and, unlike Beauchamp et al. (2015), there are no risky

prospects that could increase cognitive complexity. Second, consumers were unlikely to infer that

they are “middlebrow” relative to the bounds of the MPL: the distribution of responses suggests

that the first two questions had relatively obvious answers (very few people were willing to pay

significant amounts to avoid HERs) while the last two questions did not (many people were in the

top two WTP ranges).


IV Empirical Analysis

In this section, we estimate parameters needed for the welfare analysis prescribed by Equation

(6). We begin by estimating the treatment effects on energy use, which determine the externality

benefits and profit losses. We then calculate average WTP, which will be our measure of the

consumer welfare effects.

IV.A Effects on Energy Use

To estimate the effect of Home Energy Reports on energy use, we limit the sample to post-treatment data and control for pre-treatment usage, allowing the coefficient to vary over time.¹⁴ Post-treatment is defined as any meter read after the household’s first HER was generated. The

first HERs were generated on October 13th, 2014, and first HERs had been generated for 98 percent

of households by December 8th. We also observe generation dates for HERs that would have been

sent to the control group.

$Y_{it}$ is household $i$’s average natural gas use (in ccf/day) over the billing period ending on date $t$, and $R_i$ is a recipient group indicator variable. $\tilde{Y}_{it}$ is the average usage during the billing period ending 12 months prior, and $\nu_m$ allows separate coefficients on $\tilde{Y}_{it}$ by month. $\omega_{mq}$ is a vector of indicators for baseline usage quartile interacted with the month containing date $t$. The estimating equation is:

$$Y_{it} = \tau R_i + \nu_m \tilde{Y}_{it} + \omega_{mq} + \varepsilon_{it}. \qquad (7)$$

Standard errors are clustered by household to allow for arbitrary serial correlation.

Figure 6 presents estimates of the τ parameter, separately for each pair of months after the

baseline period ends on August 31, 2014. The several months of pre-treatment observations allow

us to test for spurious pre-treatment effects, and indeed there are no statistically significant effects for meters read in September and October. There are also no statistically significant effects for meters read in November and December. For the coldest part of winter, the billing periods ending in January through April, the recipient group reduces gas use by about 0.04 ccf/day. The standard errors for May 2015 widen substantially because the final meter read in our current data is May 22nd, so only 22 days of reads underlie that data point instead of a full two months.

14

Natural gas use is highly seasonal: average consumption drops below 0.5 ccf/day in the summer and rises above

4 ccf/day in the winter. Thus, controlling for seasonal fluctuations is crucial for improving statistical efficiency. Note

that estimating in logs and transforming the percent savings back into levels is not a consistent estimator of the level

of average savings due to Jensen’s Inequality. For this reason, Allcott (2011, 2015) and Allcott and Rogers (2014)

estimate effects in levels.
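The Jensen’s Inequality point can be illustrated with a small Python simulation. Everything here is hypothetical: usage is drawn from a lognormal distribution, and treatment is assumed to lower every household’s use by exactly 0.03 ccf/day, so the true average level effect is known. Converting the mean log difference back into levels overstates that effect, because a fixed level reduction is a larger log change for low-usage households.

```python
import math
import random

random.seed(1)
true_effect = -0.03  # hypothetical constant level effect, ccf/day

# Hypothetical counterfactual usage (ccf/day) and treated usage for the same households.
control = [random.lognormvariate(1.0, 0.6) for _ in range(100_000)]
treated = [y + true_effect for y in control]

mean_control = sum(control) / len(control)
# Average effect estimated in logs, then transformed back into levels at the control mean.
log_effect = sum(math.log(t) - math.log(c) for t, c in zip(treated, control)) / len(control)
implied_level_effect = (math.exp(log_effect) - 1.0) * mean_control

print(f"true level effect: {true_effect:.4f}")
print(f"implied from logs: {implied_level_effect:.4f}")
```

The gap does not shrink with more data, which is what makes the back-transformed log estimator inconsistent for the level of average savings.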


Figure 6: Effects of Home Energy Reports on Natural Gas Use

[Plot of the treatment effect (ccf/day) by two-month period from September 2014 to May 2015, with 90% confidence intervals; a marker indicates when treatment begins.]

Notes: This figure presents estimates of Equation (7), allowing the treatment effect to vary by two-month

periods. Dependent variable is natural gas use in ccf/day, where “ccf” means hundred cubic feet. For

context, one ccf is worth about $0.98 at retail prices. Robust standard errors, clustered by household.

Table 4 presents estimates of Equation (7). Column 1 controls only for the 12-month lag usage $\tilde{Y}_{it}$, and columns 2-4 progressively add controls. The estimates are very stable. In column 4, our primary estimate of the average treatment effect through May 22nd is a 0.0278 ccf/day decrease.

This sums to $5.52 of retail natural gas cost savings through that date. Control group natural gas

use averages 3.66 ccf/day in the post-treatment period, so the treatment effect amounts to 0.76

percent of counterfactual use. In percent terms, this is substantially less than the typical effect of

HERs on electricity use (Allcott 2015), but Opower’s natural gas-focused programs typically have

smaller percent effects. Given the small sample relative to other HER programs, it is not surprising

that the t-statistics are around 1.8 instead of larger.
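The headline figures can be checked with simple arithmetic. This sketch uses only numbers stated in the text (the column 4 estimate, the 3.66 ccf/day control mean, the roughly $0.98/ccf retail price, and the $5.52 total). The "implied days" figure is our own back-of-envelope inversion, assuming a constant daily effect; it is not reported by the source, and the actual number of treated days depends on the billing data.

```python
# Figures reported in the text for Table 4, column 4
ate_ccf_per_day = -0.0278   # average treatment effect, ccf/day
control_mean = 3.66         # control-group post-treatment mean, ccf/day
price_per_ccf = 0.98        # approximate retail price, $/ccf
reported_savings = 5.52     # cumulative retail savings through May 22nd, $

# Effect as a percent of counterfactual (control-group) use
percent_effect = 100 * ate_ccf_per_day / control_mean

# Daily dollar savings, and the number of days that would accumulate to $5.52
# if the effect were constant (an assumption, not a reported quantity)
daily_dollars = -ate_ccf_per_day * price_per_ccf
implied_days = reported_savings / daily_dollars

print(f"effect: {percent_effect:.2f}% of counterfactual use")
print(f"savings: ${daily_dollars:.4f}/day, about {implied_days:.0f} days to reach ${reported_savings}")
```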


Table 4: Effects on Natural Gas Use in the Program's First Year

                                 (1)         (2)         (3)         (4)         (5)
1(Report recipient)           -0.0316     -0.0308     -0.0294     -0.0278     -0.0301
                              (0.0161)*   (0.0162)*   (0.0161)*   (0.0162)*   (0.0174)*
Observations                   49,873      49,873      49,873      49,873      49,873
R²                              0.819       0.822       0.825       0.827       0.825
12-month lag usage               Yes         Yes         Yes         Yes         Yes
Month indicators                             Yes         Yes         Yes         Yes
12-month lag use×month                                   Yes         Yes         Yes
Baseline use quartile×month                                          Yes         Yes
Weights                        Duration    Duration    Duration    Duration    Duration × P̂r(Responded|X)

Notes: This table presents estimates of Equation (7), using post-treatment data only. Dependent variable

is natural gas use in hundred cubic feet (ccf) per day. Control group sample mean usage is 3.66 ccf/day.

Robust standard errors, clustered by household, in parentheses. *, **, ***: statistically significant with 90,

95, and 99 percent confidence, respectively.
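As a quick check on the "t-statistics around 1.8" statement, the ratios of the rounded point estimates to their standard errors in Table 4 can be computed directly. This is a sketch on the rounded table entries only; because of rounding, column 1's ratio lands very close to the 1.96 cutoff, so significance stars cannot be reproduced exactly from these entries.

```python
# Point estimates and household-clustered standard errors from Table 4, columns 1-5
estimates = [-0.0316, -0.0308, -0.0294, -0.0278, -0.0301]
std_errors = [0.0161, 0.0162, 0.0161, 0.0162, 0.0174]

# t-statistic = estimate / standard error
t_stats = [b / se for b, se in zip(estimates, std_errors)]
for col, t in enumerate(t_stats, start=1):
    print(f"column {col}: t = {t:.2f}")
```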

Column 5 presents estimates with the sample re-weighted on observables X to match the survey respondents with valid WTP, using fitted probabilities from the probit estimates in Appendix Table A7. The effect is slightly larger, although not statistically significantly so, which suggests that survey respondents have somewhat larger energy savings, perhaps because they are more engaged with the HERs.
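To see how the column 5 weights can move the estimate, here is a stripped-down sketch. It weights each observation by billing-period duration times a fitted response probability, as in the Table 4 weights row, but omits all other controls. In that special case the weighted least squares coefficient on a treatment dummy equals the treated-minus-control difference in weighted means. The households, probabilities, and function names are hypothetical.

```python
def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

def weighted_effect(usage, treated, duration, p_respond):
    """Treated-minus-control difference in weighted mean usage.

    Weights mimic Table 4, column 5: duration times the fitted probability
    of responding with valid WTP. No other controls are included, so this
    equals the WLS coefficient on a treatment dummy alone.
    """
    w = [d * p for d, p in zip(duration, p_respond)]
    treat = [(u, wi) for u, wi, z in zip(usage, w, treated) if z]
    ctrl = [(u, wi) for u, wi, z in zip(usage, w, treated) if not z]
    return weighted_mean(*zip(*treat)) - weighted_mean(*zip(*ctrl))

# Hypothetical households: likely respondents (p = 0.4) save more than others (p = 0.1)
usage = [3.4, 3.7, 3.6, 3.8]   # ccf/day
treated = [1, 1, 0, 0]
duration = [30, 30, 30, 30]    # days in billing period
p_hat = [0.4, 0.1, 0.4, 0.1]

effect_resp = weighted_effect(usage, treated, duration, p_hat)      # favors likely respondents
effect_dur = weighted_effect(usage, treated, duration, [1.0] * 4)   # duration weights only
print(f"respondent-weighted: {effect_resp:.2f}, duration-weighted: {effect_dur:.2f}")
```

When likely respondents save more, up-weighting them makes the estimated effect larger in magnitude, which is the pattern column 5 shows.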

IV.B Willingness-to-Pay

Figure 7 presents the distribution of WTP, with separate bars for the mail vs. phone survey responses. Fewer households responded via mail, so all mail bars are shorter. Mail respondents also have slightly higher willingness to pay, with relatively less density in the negative range and more in the positive range. Thirty-five percent of respondents reported weakly negative WTP, although most of that group is close to indifferent: 56 percent of negative WTPs are between $0 and -$1. This dispersion in WTP, and in particular the result that a meaningful share of the population is willing to pay to avoid being nudged, motivates the analysis of opt-in programs and targeting in Section VI.


Figure 7: Willingness-to-Pay for Home Energy Reports

[Figure: histogram of the percent of respondents in each WTP bin (-9 or less, [-9,-5], [-5,-1], [-1,0], [0,1], [1,5], [5,9], 9 or more), with separate bars for mail and phone responses.]

Notes: This figure presents the histogram of willingness-to-pay for four more Home Energy Reports, with

all survey responses weighted equally.

Table 5 presents correlates of WTP. To simplify the presentation of the many X covariates, column 1 presents the post-Lasso estimator: we use Lasso for variable selection, then present the OLS regression of WTP on the selected covariates; see Belloni and Chernozhukov (2013). The correlations are intuitive. Point estimates suggest that income and buyer score are positively associated with WTP and that retirees have lower WTP. Renters also have lower WTP, likely because renters do not have the ability or incentive to make energy-saving capital stock changes in response to HERs. People who have donated to animal and wildlife causes have higher WTP, perhaps because this proxies for interest in environmental conservation.
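The post-Lasso procedure can be sketched in a few steps: standardize each covariate (the Table 5 notes say variables were normalized to standard deviation one for the Lasso step only), run Lasso by coordinate descent to pick covariates, then refit plain OLS on the selected covariates in their original units. This is a minimal sketch on simulated data. The penalty lam = 0.2 is an arbitrary assumption; the text does not say how the penalty was chosen, and Belloni and Chernozhukov (2013) discuss data-driven choices that this sketch does not implement.

```python
import random

def standardize(col):
    """Rescale a column to mean zero and standard deviation one."""
    n = len(col)
    mean = sum(col) / n
    sd = (sum((v - mean) ** 2 for v in col) / n) ** 0.5
    return [(v - mean) / sd for v in col]

def lasso(X, y, lam, iters=200):
    """Coordinate descent for (1/2n)*||y - Xb||^2 + lam*||b||_1.
    Assumes the columns of X are standardized and y is centered."""
    n, k = len(y), len(X[0])
    b = [0.0] * k
    resid = list(y)
    for _ in range(iters):
        for j in range(k):
            # Covariance of column j with the residual that excludes column j
            rho = sum(X[i][j] * (resid[i] + X[i][j] * b[j]) for i in range(n)) / n
            new = max(abs(rho) - lam, 0.0) * (1.0 if rho > 0 else -1.0)  # soft-threshold
            if new != b[j]:
                for i in range(n):
                    resid[i] -= X[i][j] * (new - b[j])
                b[j] = new
    return b

def ols(X, y):
    """OLS with an intercept, solving the normal equations by Gaussian elimination."""
    rows = [[1.0] + list(r) for r in X]
    p = len(rows[0])
    A = [[sum(r[a] * r[c] for r in rows) for c in range(p)] for a in range(p)]
    v = [sum(r[a] * yi for r, yi in zip(rows, y)) for a in range(p)]
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        v[col], v[piv] = v[piv], v[col]
        for r in range(col + 1, p):
            f = A[r][col] / A[col][col]
            for c in range(col, p):
                A[r][c] -= f * A[col][c]
            v[r] -= f * v[col]
    beta = [0.0] * p
    for r in range(p - 1, -1, -1):
        beta[r] = (v[r] - sum(A[r][c] * beta[c] for c in range(r + 1, p))) / A[r][r]
    return beta

def post_lasso(X, y, lam):
    """Select covariates by Lasso on standardized data, then refit OLS on raw data."""
    k = len(X[0])
    cols = [standardize([row[j] for row in X]) for j in range(k)]
    Xs = [list(r) for r in zip(*cols)]
    ybar = sum(y) / len(y)
    b = lasso(Xs, [yi - ybar for yi in y], lam)
    selected = [j for j in range(k) if b[j] != 0.0]
    coefs = ols([[row[j] for j in selected] for row in X], y)
    return selected, coefs

# Simulated data: five candidate covariates, only covariates 0 and 1 matter
rng = random.Random(0)
X = [[rng.gauss(0, 1) for _ in range(5)] for _ in range(200)]
y = [1.0 + 2.0 * r[0] - 1.5 * r[1] + rng.gauss(0, 0.3) for r in X]
selected, coefs = post_lasso(X, y, lam=0.2)
print("selected:", selected, "coefficients:", [round(c, 2) for c in coefs])
```

Refitting OLS after selection removes the Lasso shrinkage from the reported coefficients, which is why column 1 can be read like an ordinary regression table.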

We carry out the welfare analysis for two populations: the subset of households that responded

to the MPL, and the entire set of households in the HER recipient group. The latter welfare

calculation requires extrapolating from respondents to non-respondents. Our primary approach to

extrapolating to the full HER recipient population is to use inverse probability weights (IPWs)

to re-weight the sample of respondents with valid WTP to match the full recipient population on

observables. See Appendix Table A7 for the probit estimates used for this reweighting.
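The logic of inverse probability weighting can be shown with a toy population. In this hypothetical example, 100 type-A households have WTP of $5 and respond with probability 0.5, while 100 type-B households have WTP of $1 and respond with probability 0.1, so the true population mean is $3. Respondents over-represent type A, but weighting each respondent by 1 / Pr(respond | X) restores the population mix. The probabilities here are assumed known; in the paper they are fitted from the Appendix Table A7 probit, and the correction only addresses selection on observables.

```python
def ipw_mean(values, p_respond):
    """Mean of respondents' values, weighting each by 1 / Pr(respond | X)."""
    w = [1.0 / p for p in p_respond]
    return sum(v * wi for v, wi in zip(values, w)) / sum(w)

# Expected respondents from the hypothetical population described above
wtp = [5.0] * 50 + [1.0] * 10     # 50 type-A and 10 type-B respondents
p_hat = [0.5] * 50 + [0.1] * 10   # their response probabilities

unweighted = sum(wtp) / len(wtp)
weighted = ipw_mean(wtp, p_hat)
print(f"unweighted ${unweighted:.2f}, IPW ${weighted:.2f}")
```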


Table 5: Correlates of WTP and Their Correlation with Response

                               (1)           (2)
Dependent variable:            WTP           Have WTP
Baseline use (ccf/day)         0.0912        0.0120
                               (0.101)       (0.00897)
ln(Income)                     0.0427        0.0254
                               (0.243)       (0.0227)
Retired                        -1.905        0.103
                               (0.836)**     (0.0798)
Married                        0.684         -0.0108
                               (0.416)*      (0.0370)
Rent                           -0.724        -0.109
                               (0.444)       (0.0399)***
Single family home             0.289         0.0569
                               (0.425)       (0.0384)
Wildlife donor                 1.027         0.226
                               (0.623)*      (0.0652)***
Buyer score                    0.296         0.0438
                               (0.221)       (0.0199)**
Observations                   2137          9439

Notes: Column 1 presents estimates from a post-Lasso estimator, in which OLS is run on covariates

selected by Lasso, using equally-weighted observations. For the Lasso estimates only, each variable was

normalized to standard deviation one. Column 2 presents marginal effects probit estimates from a model

where the same selected covariates are used to predict whether a household responds to a survey and has

valid WTP. Robust standard errors in parentheses. *, **, ***: statistically significant with 90, 95, and 99

percent confidence, respectively.

To give intuition for how re-weighting on observables will affect estimated WTP, column 2 of Table 5 presents marginal effects probit estimates of how the WTP predictors from column 1 are associated with whether a household responds and has valid WTP. Most coefficients have the same sign in columns 1 and 2, which suggests that survey respondents are positively selected on observables. One mechanism that works against this is that retirees have lower WTP but are more likely to respond to surveys.
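Column 2 of Table 5 reports probit marginal effects. One common definition for a continuous covariate is the average marginal effect: the mean over households of φ(x'β) times the coefficient, where φ is the standard normal density. The sketch below uses hypothetical coefficients and data. The text does not say whether Table 5 averages over households or evaluates at the means, and binary covariates such as Retired or Rent are often handled as discrete changes instead, so treat this as an illustration of the concept only.

```python
import math

def normal_pdf(z):
    return math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)

def normal_cdf(z):
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

def probit_ame(X, beta, k):
    """Average marginal effect of continuous covariate k in a probit model.

    X:    covariate rows, each starting with 1.0 for the intercept
    beta: probit coefficients in the same order as the columns of X
    """
    index = [sum(x * b for x, b in zip(row, beta)) for row in X]
    # dPr(respond)/dx_k = phi(x'beta) * beta_k, averaged over households
    return sum(normal_pdf(z) for z in index) / len(index) * beta[k]

# Hypothetical coefficients: intercept and one continuous covariate
beta = [-0.7, 0.3]
X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]
ame = probit_ame(X, beta, k=1)
print(f"average marginal effect: {ame:.4f}")
```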

Table 6 presents estimates of mean WTP, with standard errors in parentheses. Column 1

presents unweighted estimates, while column 2 uses row-specific IPWs to weight each row’s sample

to match the full HER recipient group on observables. Mail survey responses are divided in two

different ways: households randomly assigned to the base vs. follow-up groups and households that

actually returned the first survey vs. the follow-up survey. The bottom row of Panel A reports

that the unweighted mean WTP for the 24.5 percent of households that returned the survey is

$2.98. When re-weighted on observables to match the full recipient population, the mean falls to

$2.85, confirming that respondents are slightly positively selected on observables. We use this row of estimates as the base case for welfare analysis.

Table 6 shows that respondents to the first mail survey are positively selected. Unweighted mean WTP is higher for the randomly-assigned base group than for the follow-up group ($4.33 vs. $3.22), although the difference falls just short of significance at the 10 percent level (p ≈ 0.117). Mean WTP is much higher for households in either group that returned the first mail survey than for those that returned only the follow-up survey ($4.37 vs. $2.58, p ≈ 0.001). This positive selection is almost mechanical: people who do not open and read HERs likely have WTP closer to zero than people who do, and the former group would not even have seen the first mail survey.

Table 6: Estimates of Mean Willingness-to-Pay

                                                 (1)           (2)
                                                 Unweighted    Weighted
Panel A: Mean WTP
Mail                                             3.40          3.29
  (standard error)                               (0.26)        (0.29)
Base group                                       4.33          3.66
                                                 (0.57)        (0.61)
Follow-up group                                  3.22          3.14
                                                 (0.29)        (0.33)
Returned first survey                            4.37          4.02
                                                 (0.35)        (0.42)
Returned follow-up survey                        2.58          2.64
                                                 (0.37)        (0.41)
Phone                                            2.79          2.70
                                                 (0.18)        (0.19)
Combined                                         2.98          2.85
                                                 (0.16)        (0.16)

Panel B: p-Values of Differences
Base vs. follow-up mail                          0.117         0.510
Returned first vs. returned follow-up mail       0.001         0.019
Mail vs. phone                                   0.059         0.080
Base group vs. phone                             0.026         0.171
Follow-up group vs. phone                        0.213         0.221
Returned first survey vs. phone                  0.000         0.003
Returned follow-up survey vs. phone              0.606         0.894

Notes: Estimates in column 2 are re-weighted to match the HER recipient group on observables.
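For two groups with no overlapping households, a two-sided p-value for a difference in means can be approximated from each mean and its standard error with a normal (z) test. This sketch assumes independent samples and a normal approximation. It reproduces the "Returned follow-up survey vs. phone" row of Panel B to within rounding, but it is not necessarily how Panel B was computed, and rows whose groups share households or were estimated jointly need not match this formula.

```python
import math

def diff_p_value(mean_a, se_a, mean_b, se_b):
    """Two-sided p-value for a difference in means from independent samples."""
    z = (mean_a - mean_b) / math.sqrt(se_a ** 2 + se_b ** 2)
    # Standard normal CDF via the error function
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return 2 * (1 - phi)

# "Returned follow-up survey vs. phone" row, using Panel A means and standard errors
p_unweighted = diff_p_value(2.58, 0.37, 2.79, 0.18)
p_weighted = diff_p_value(2.64, 0.41, 2.70, 0.19)
print(f"unweighted p = {p_unweighted:.3f}, weighted p = {p_weighted:.3f}")
```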

By contrast, the phone survey and the follow-up mail survey, which was sent from a different outbound address and was not part of an HER, are not subject to this form of positive selection. Indeed, unweighted mean WTP is statistically and economically very similar for phone survey vs. follow-up mail survey respondents ($2.79 vs. $2.58, p ≈ 0.606), and the weighted means are almost identical ($2.70 vs. $2.64, p ≈ 0.894). This implies that these two samples are either not selected relative to non-respondents, or that they have the same sample selection bias despite coming from two different forms of contact (mail vs. phone). Appendix Table A8 presents suggestive evidence in