MPI Progress For All

Hui Zhou

1

, Robert Latham

1

, Ken Raffenetti

1

,

Yanfei Guo

1

, and Rajeev Thakur

1

Argonne National Laboratory, Lemont, IL 60439, USA

Abstract. The progression of communication in the Message Passing

Interface (MPI) is not well defined, yet it is critical for application per-

formance, particularly in achieving effective computation and commu-

nication overlap. The opaque nature of MPI progress poses significant

challenges in advancing MPI within modern high-performance comput-

ing practices. First, the lack of clarity hinders the development of ex-

plicit guidelines for enhancing computation and communication overlap

in applications. Second, it prevents MPI from seamlessly integrating with

contemporary programming paradigms, such as task-based runtimes and

event-driven programming. Third, it limits the extension of MPI func-

tionalities from user space. In this paper, we examine the role of MPI

progress by analyzing the implementation details of MPI messaging. We

then generalize the asynchronous communication pattern and identify

key factors influencing application performance. Based on this analysis,

we propose a set of MPI extensions designed to enable users to construct

and manage an efficient progress engine explicitly. We compare our ap-

proach to previous efforts in the field, highlighting its reduced complexity

and increased effectiveness.

1 Intro duction

Overlapping computation and communication [1,13] is a key performance goal

in high-performance computing (HPC). Ideally, with 100% computation/com-

munication overlap, communication and synchronization become effectively free,

allowing parallel applications to scale perfectly. However, achieving this overlap

goal remains challenging. The Message Passing Interface (MPI), the de facto

communication runtime for HPC applications, does not precisely define how

communication progress is made. MPI guarantees that once communication is

initiated, it will complete, but it does not specify whether progress occurs during

the starting call (e.g., MPI Isend), the completion call (e.g., MPI Wait), or in be-

tween. To achieve effective computation/communication overlap, strong progress

from MPI is desirable [5], meaning that MPI can make progress between the

starting and completion calls without explicit MPI calls from the user. How-

ever, implementing strong progress poses constraints on MPI implementations

and is not always feasible. One approach is to employ a default asynchronous

progress thread [10]. However, due to missing context from the application, a

global progress thread often leads to performance issues and is generally not

arXiv:2405.13807v2 [cs.DC] 12 Jul 2024

considered a good solution. Thus, it remains important for applications to have

a strategy for managing MPI progress to achieve optimal performance.

Currently, applications have limited means to explicitly control MPI progress.

Using an MPI blocking call ensures that the operation completes before the

call returns, but it blocks the calling thread from performing computations.

With nonblocking MPI calls, an application can split an MPI operation into

a start and a completion call and perform computation in between. However,

depending on the implementation, the communication may be blocked until the

completion call rather than overlapping with the computation [4]. To improve the

communication/computation overlap, there needs to be a strategy to invoke MPI

progress regularly during computation. The current method for the application

to invoke MPI progress is via MPI Test, but MPI Test is tied to a specific MPI

request. Thus, designing a progress engine that includes MPI progress requires a

synchronization mechanism for managing MPI requests, which is often complex

and prone to inefficiency.

Asynchronous programming, including task-based [12] and event-driven pro-

gramming [2], is gaining popularity as modern computing architectures incor-

porate more cores and adopt more hybrid architectures. Asynchronous task-

based programming allows programmers to separate writing tasks from manag-

ing deployment and progression. Event-driven programming alleviates the need

to manage asynchronous handles and enables programmers to express tasks that

involve multiple asynchronous events. Compared to traditional MPI program-

ming, where programmers need to partition jobs from the start and explicitly

manage synchronization, these asynchronous programming styles significantly

reduce the complexity of parallel programming. However, MPI poses challenges

to working with these asynchronous programming styles. Task-based runtimes

need to manage MPI nonblocking operations via MPI requests and regularly

test for completion to maintain the states of individual tasks [11]. Similarly, to

act on MPI completion events, one needs to regularly test a set of active MPI

requests. The lack of interoperable MPI progress may lead to multiple test-yield

cycles that waste CPU cycles and cause contention on shared MPI resources.

As HPC enters the exascale era, MPI faces performance challenges on in-

creasingly hybrid node architectures. Achieving high performance with MPI im-

plementations is becoming more complex and challenging compared to hand-

tuned, non-portable solutions. Researchers need the ability to prototype MPI

algorithms and MPI extensions independently of MPI implementations. The

ROMIO project, which prototyped and implemented MPI-IO during MPI-2 stan-

dardization [14], is a good example of such an approach. Today, a potential area

for similar innovation is MPI collective operations. An algorithm for a collective

operation often involves a collection of communication patterns tied together by

a progression schedule. An optimized collective algorithm may integrate both

MPI communications and asynchronous local offloading steps, tailored to spe-

cific system configurations and application needs. Therefore, exposing and mak-

ing MPI progress interoperable with user-layer asynchronous tasks will stimulate

broader community research activities, driving future advancements in MPI. We

2

aim to address a common debate in MPI standardization meetings – whether a

proposed feature needs to be in MPI or whether it can be a library on top of

MPI. Exposing new progress APIs will facilitate more tightly-coupled libraries,

so more features can be built on top of MPI first, rather than directly added

into MPI before widespread adoption.

In this work, we introduce a set of MPI extensions to allow applications to

explicitly invoke MPI progress without tying to specific communication calls,

thereby enabling applications to manage MPI progress without the complexity

of handling individual MPI request objects. Our previously introduced MPIX

Stream concept [15] is used to target progress to specific contexts, avoiding mul-

tithreading contention issues associated with traditional global progress. We also

recognize that MPI progress can be extended to collate progress for asynchronous

tasks in general, simplifying the management of multiple progress mechanisms

and avoiding wasting cycles on maintaining multiple progress engines.

2 Anatomy of Asynchronous Tasks and Role of Progress

Before discussing how to manage progress, we need to define what is progress

and understand its role.

2.1 MPI’s Message Modes

To understand the role of progress, we examine how an MPI implementation

might send and receive messages. The following discussion is based on MPICH,

but we believe it is applicable to other MPI implementations as well.

Figure 1 illustrates various modes of MPI

Send and MPI Recv. When sending

a small message, the implementation may immediately copy the message to

the Network Interface Card (NIC)

1

and return, marking the send operation

as complete (see Figure 1(a)). While the actual transmission may still be in

progress, the send buffer is safe for the application to use. In MPICH, this is

called a lightweight send, which notably does not involve any wait blocks.

For larger messages, buffering costs can be significant. Instead, the message

buffer pointer is passed to the NIC, which transmits the message directly from

the buffer. MPI Send must wait until the NIC signals completion, as the mes-

sage buffer remains in use until then. This method, known as eager send mode,

involves a single wait block (see Figure 1(b)).

When messages are even larger, early arrival at the receiver can cause issues,

such as blocking the receiver’s message queue or necessitating temporary buffer

copying. To prevent unexpectedly receiving large messages, a handshake protocol

is used: the sender sends a Ready to Send (RTS) message and waits for the

receiver to post a matching buffer and reply with a Clear to Send (CTS) message.

The sender then proceeds to send the message data similarly to eager mode. This

rendezvous mode involves two wait blocks (see Figure 1(c)).

1

Here “NIC” loosely refers to either hardware operations or software emulations.

3

(a) Buffered send. (b) Eager send. (c) Rendezvous send.

(d) Eager unexpected receive. (e) Eager expected receive. (f) Rendezvous receive.

CPU Wait NIC

CPU NIC

initiate

finalize

buffer

CPU NIC

initiate

finalize

wait

CPU NIC

initiate

send data

finalize

wait

wait

RTS

CTS

data

CPU NIC

initiate

finalize

wait

CPU NIC

initiate

finalize

wait

CPU NIC

initiate

finalize

wait

wait

RTS

CTS

data

Fig. 1. Common communication modes: (a) Buffered eager send; (b) Normal eager

send; (c) Rendezvous send; (d) Receiving an eager message that arrived before posting

the receive; (e) Receiving an eager message that arrived after posting the receive; (f)

Receiving a rendezvous message.

MPI Recv operations vary as shown in Figure 1(d-f). Receiving an eager mes-

sage, including those sent via lightweight send, involves a single wait block re-

gardless of whether the message arrives before or after MPI Recv. Receiving a

rendezvous message requires two wait blocks.

Additional message modes with more complex protocols, such as pipeline

mode, may involve multiple wait blocks. In pipeline mode, a large message is

divided into chunks, and the implementation may control the number of concur-

rent chunks, leading to an indeterminate number of wait blocks.

2.2 Nonblocking and Asynchronous Task Patterns

The wait blocks in Figure 1 illustrate why MPI Send and MPI Recv are considered

blocking operations. By focusing on the wait blocks in the block diagram, we

can abstract tasks such as MPI Send and MPI Recv into three patterns, as shown

in Figure 2: tasks that do not wait, tasks that contain a single wait block, and

tasks with multiple wait blocks. During a wait block, the task is executed on

a hardware device such as a NIC or GPU, within the OS kernel, or within a

separate execution context such as a thread or process.

The wait block is often implemented as a busy poll loop, which wastes CPU

cycles while the offloaded task is still in progress. Conversely, if the offloaded task

4

Asynchronous Task Patterns

(a) (b) (c)

CPU Wait NIC

Fig. 2. Task patterns: (a) A task with

no blocking parts; (b) A task with a

single blocking part; (c) A task with

multiple blocking parts.

Nonblocking Start

Completion

(a) (b) (c)

CPU Wait NIC

Fig. 3. Nonblocking tasks: (a) A task

with no blocking parts; (b) A task with

a single blocking part; (c) A task with

multiple blocking parts.

finishes and the completion event is not immediately polled and acted upon, it

can delay subsequent dependent work, adding latency to the workflow.

Rather than immediately waiting for an asynchronous task to complete, a

program can, in principle, perform other jobs that do not depend on the pending

task. This is the idea behind MPI’s nonblocking APIs. A nonblocking operation

splits a corresponding blocking operation into two parts: starting and completion.

For example, MPI Send is divided into MPI Isend and MPI Wait.

Figure 3 illustrates how blocking patterns in Figure 2 are split into non-

blocking patterns. If the task does not contain any wait blocks (Figure 3(a)),

the split into a nonblocking pattern is somewhat arbitrary, but typically the

entire operation is completed in the starting call, and the completion call will

return immediately. If the task contains a single wait block (Figure 3(b)), it is

naturally split just before the wait block. For tasks with multiple wait blocks

(Figure 3(c)), the split occurs before the first wait. Generally, the starting call

should avoid any wait blocks to preserve the nonblocking semantics.

Viewing MPI operations through the lens of wait patterns generalizes MPI

nonblocking operations to common asynchronous programming patterns. For in-

stance, the async/await syntax [8] in some programming languages provides a

concise method to describe the wait patterns in a task. Event-driven program-

ming [2], on the other hand, expresses the code following the wait block as

event callbacks. In MPI, these async patterns are opaque, making MPI progress

management obscure.

2.3 Computation/Communication Overlap

One of the primary goals of using nonblocking MPI operations is to achieve over-

lap between computation and communication. Ideally, the CPU cycles spent in

5

Computation/Communicaton Overlap

(a) (b) (c)

CPU Wait

Compute

NIC

Fig. 4. Computation/communication

overlap: (a) Communication with no

blocking parts; (b) Communication

with single blocking part; (c) Commu-

nication with multiple blocking parts.

Progress Schemes

(a) (b)

CPU Test

Compute

NIC

Fig. 5. Remedies for the lack of

progress: (a) Intersperse progress tests

inside computations; (b) Use a ded-

icated thread to continuously poll

progress.

a wait loop should instead be used for computation, enhancing overall efficiency.

However, achieving this overlap with MPI is not straightforward.

The concept of computation/communication overlap is illustrated in Fig-

ure 4. Immediately after initiating a nonblocking operation, the program enters

a computation phase while the message data transmission is handled by the NIC

hardware or another offloading device. Once the computation phase completes,

the program resumes the wait for the nonblocking operation. If the communi-

cation has finished by then, the final wait returns immediately; otherwise, the

wait time is significantly reduced. This overlap maximizes efficiency, improving

overall performance and reducing time to solution.

The ideal overlap can be easily achieved for the case in Figure 4(b), where a

single wait in the nonblocking operation allows for effective overlap. In contrast,

Figure 4(a) shows a scenario with no wait block to save, offering no additional

overlap compared to the blocking case. Converting a blocking operation with-

out a wait into a nonblocking one only introduces overhead due to the creating

and finalizing of a task handle (i.e., an MPI request). However, in most MPI

implementations, this overhead is negligible. The situation is more complex in

Figure 4(c), where multiple wait blocks are present, and computation only over-

laps with the first wait block. This initial overlap is often insignificant compared

to the total combined wait time. For instance, in a simple rendezvous message,

the first wait involves waiting for a small protocol handshake, while the bulk

of the message transmission occurs during the second wait. As a result, the

opportunity for significant overlap is missed in such cases.

6

2.4 Role of Progress

The key issue with the scenario depicted in Figure 4(c) is the lack of progress.

After the first wait ends, a small block of code needs to run to initiate the sec-

ond wait. For asynchronous tasks with multiple wait blocks, this small block

of code after each wait block must run to trigger the subsequent asynchronous

tasks for the next wait. Polling for completion events and running the handlers

to initiate the following asynchronous tasks constitute progress. Without ade-

quate progress, the next steps in the asynchronous task are delayed, resulting in

degraded performance.

There are two remedies for this lack of progress. One is to intersperse

MPI Test calls within the computation, as illustrated in Figure 5(a). However,

this approach has at least three drawbacks. First, breaking the computation into

parts and interspersing it with progress calls significantly increases code com-

plexity and may not always be feasible. For example, the computation might be

encapsulated in an opaque function, or the bulk of it might be spent in a math

routine from an external library. Second, if progress polling is too frequent, many

polls will waste CPU cycles without benefit, and the context switching between

computation and progress polling adds overhead. Consequently, frequent polling

decreases performance. Third, if progress polling is too sparse, the likelihood of

polling just after a communication step completes is low, resulting in imperfect

computation/communication overlap.

An alternative solution is to use a dedicated CPU thread for polling progress.

This approach ensures sufficient progress and maximizes the overlap between

computation and communication. However, it also wastes CPU cycles when a

communication step is not ready and occupies an entire CPU core. While modern

HPC systems often have many cores, dedicating a CPU core for progress can be

acceptable for some applications. However, this becomes problematic when mul-

tiple processes are launched on a single node. If each process has its own progress

thread, it can quickly exhaust CPU cores and severely impact performance. Ad-

ditionally, applications may use other asynchronous subsystems besides MPI,

each potentially requiring its own progress thread, leading to competition for

limited core resources.

2.5 Managing MPI Progress

Implementing a progress thread with MPI is challenging, primarily because MPI

does not provide explicit APIs for invoking MPI progress. MPI progress is largely

hidden within the implementation, with the assumption that a system-optimized

MPI will provide strong progress, thereby reducing the need for explicit progress.

However, as discussed earlier, this assumption may not always hold true. Calling

any MPI function may or may not invoke MPI progress, and when it does, it

may not serve global progress. For instance, calling MPI Test on one MPI request

may not necessarily advance other MPI requests. Furthermore, any MPI function

that invokes progress may contend for locks with another thread calling MPI

7

functions. Managing MPI progress can feel almost magical when it works, but

extremely frustrating when it fails.

One of the more explicit ways to invoke MPI progress is by calling MPI Test

(or any of its variants). MPI Test requires an explicit MPI request parameter.

However, a dedicated progress thread is often isolated from the context that

initiates the actual MPI operations, making it challenging to synchronize MPI

requests between the computation threads and the progress thread. This syn-

chronization of MPI request objects imposes a significant burden on a many-task

system design. Therefore, to enable applications to build effective progress en-

gines, MPI needs to provide mechanisms for invoking progress independent of

specific MPI requests.

2.6 Collating Progress

In addition to the inconvenience of having to use an MPI request to call

MPI

Test, polling progress for each individual MPI requests is also inefficient. It

is more efficient to poll for all events, process them one by one, and then check

whether a specific MPI request has been completed from the event handling.

This is referred to as collating progress. Collating progress ensures that all parts

of the program are progressing towards completion, rather than waiting unneces-

sarily for each individual operation to complete sequentially. Collating progress

can help improve overall application performance by reducing bottlenecks and

enhancing concurrency.

In addition to collating progress for network operations, an MPI library in-

ternally needs to manage the progress of multiple asynchronous subsystems. For

example, data transfer may involve GPU device memory, meaning a conventional

MPI send and receive could include asynchronous memory copy operations be-

tween host and device memory. MPI-IO may introduce asynchronous storage

I/O operations. Collectives are often implemented as a series of nonblocking

point-to-point communications following a multi-stage pattern similar to Fig-

ure 3(c). Additionally, MPI communication may internally utilize different sub-

systems depending on whether the communication is between on-node processes

or inter-node processes. All these asynchronous subsystems require progress, and

it is often more convenient and efficient to collate them.

Listing 1.1 shows the pseudocode of MPICH’s internal progress function.

Listing 1.1. MPICH’s progress function

int MP ID I_ pr og re s s_ te st ( M P ID _P ro gr es s _s ta te * sta te ) {

int mpi _er rn o = MP I_SU CCE S S , ma de _p ro gr ess = 0;

/* a s yn ch r on o us da ta ty pe p ack / u npa ck */

Da ta ty p e_ en gi n e_ pr og re s s (& ma de _p ro gr es s );

if ( mad e_ pr og re ss ) goto fn _ ex it ;

/* c ol l ec ti v e a lg or i th ms */

Co ll ec t iv e_ sc h ed _p ro g re ss (& ma de _p rog re ss );

if ( mad e_ pr og re ss ) goto fn _ ex it ;

/* i nt r an od e s har ed m em or y c om m un i c a ti o n */

Sh me m_ pr og re ss (& mad e_ pr og re ss );

8

if ( mad e_ pr og re ss ) goto fn _ ex it ;

/* i nt e rn od e n etm od co mm u ni c at i on */

Ne tm od _p ro gr es s (& ma de _p ro gr es s );

if ( mad e_ pr og re ss ) goto fn _ ex it ;

fn_ exi t :

retu rn m pi_ er rno ;

}

This progress routine is called whenever an MPI function requires progress.

Collating progress assumes that the cost is negligible if a subsystem has no

pending tasks. For the datatype engine, collective, and shared memory (shmem)

subsystems, an empty poll incurs a cost equivalent to reading an atomic vari-

able. However, this is not always the case with netmod progress, so we place

netmod progress last and skip it whenever progress is made with other subsys-

tems. Additionally, MPICH’s progress function accepts a state variable from the

calling stack, providing an opportunity for the caller to tune the progress perfor-

mance according to the context. For example, from a context where only netmod

progress is needed, the progress state can be set to skip progress for all other

subsystems. Since MPI implementations already perform collated event-based

progress internally, exposing MPI progress as an explicit API for applications is

straightforward.

2.7 Case for Interoperable MPI Progress

As we have discussed, the patterns of MPI internal operations are similar to

those of general asynchronous tasks that applications may create. Therefore, the

design and optimization of MPI progress should be applicable to application-

layer asynchronous tasks as well. In fact, current MPI implementations already

handle several async subsystems internally, making it straightforward to extend

this capability to work with external tasks. We refer to this concept as “inter-

operable MPI progress.”

Interoperable MPI progress provides applications with a mature progress

engine, eliminating the need to create and maintain separate progression mech-

anisms for each new async system. Additionally, integrating user-layer progress

within MPI progress is more convenient and efficient.

Another advantage of interoperable MPI progress is that it allows for the

implementation and extension of MPI subsystems at the user level. For instance,

users could implement collectives in user space by adding a progress hook into

MPI’s progress, similar to the Collective sched progress in Listing 1.1. This

approach promotes a modular design where parts of MPI are built on top of a

core MPI implementation, enhancing both flexibility and stability. Furthermore,

a core MPI set that facilitates the building of MPI extensions can stimulate

broader community research activities and infuse new life into MPI.

9

3 MPICH Extensions to Enable Progress and

Event-Driven Programming

In this section, we present new extension APIs developed in MPICH that en-

able applications to more effectively manage MPI progress and to extend MPI

through interoperable MPI progress.

3.1 MPIX Streams

First, to address the issue of lacking execution context in MPI operations and

MPI progress, we utilize the MPIX Stream concept proposed in our previous

work[15]. An MPIX Stream represents an internal communication context within

the MPI library, defined as a serial execution context. All operations attached

to an MPIX Stream are required to be issued in a strict serial order, eliminating

the need for lock protection within the MPI library. This allows a multithreaded

application to achieve maximum parallel performance without the penalty of lock

contention. Additionally, MPIX Streams can be applied to progress, targeting

operations in a specific stream.

An MPIX Stream is created using the following API:

i n t MPIX S tr ea m create ( MPI Info i n f o , MPIX Stream ∗ s t re a m )

To use an MPIX Stream in MPI communications, you must first create a

stream communicator with the following function:

i n t MPIX Stream comm create (MPI Comm parent comm , MPIX Stream

stream , MPI Comm ∗ stream comm )

A stream communicator can be used the same way as a conventional MPI

communicator, except that all operations on a stream communicator will be as-

sociated with the corresponding MPIX Stream context. While an MPIX Stream

is naturally suited for a thread context, it can also be applied to any semanti-

cally serial construct. For example, the serial context can be manually enforced

through thread barriers, or originate from a specific runtime such as a CUDA

stream. Info hints offer a flexible mechanism for implementations to extend sup-

port and apply specific optimizations. For more detailed information on MPIX

Streams, please refer to our previous work[15].

3.2 Explicit MPI Progress

To address the need for making MPI progress without being tied to specific MPI

requests, We propose an API that allows applications to advance MPI progress

for a specific MPIX Stream:

i n t MP IX St rea m p rogre ss ( MPIX Stream s tre am )

10

If context separation is not a concern, the application can use the default

stream, MPIX STREAM NULL. However, using explicit MPIX Streams can help ap-

plications avoid unnecessary thread contention. Additionally, MPIX Streams can

be used to separate progress for different subsystems, especially if some subsys-

tems are sensitive to latency and need to avoid collating progress. For example, in

Listing 1.1, hints can be provided to the MPIX Streams to skip Netmod progress

if the subsystem does not depend on inter-node communication.

3.3 MPIX Async Extension

MPIX Stream progress allows applications to incorporate MPI progress into

their progression schemes. However, to make MPI progress truly interoperable,

we also need a mechanism for applications to add progress hooks into the MPI

progress system. This is accomplished with the following extension:

i n t MPI X As yn c st art ( M PI X A s y nc p o l l f u n c ti o n p o l l f n , void ∗

e x t r a s t a t e , MPIX Stream s tre am )

The poll fn parameter is a user-defined progress hook function that is called

from within MPI progress (e.g., inside MPIX Stream progress or MPI Test)

along with MPI’s internal progress hooks (See Listing 1.1). extra state is a

user-defined handle or a state pointer that will be passed back to poll fn. The

stream parameter attaches the task to the corresponding MPIX Stream, includ-

ing the default stream, MPIX STREAM NULL. poll fn has the following signature:

typedef s t r u c t MPIR Async thing ∗ MPIX Async thing ;

typedef in t ( MP I X A sy n c p o l l f u n c t io n ) ( MPIX Async thing ) ;

An opaque struct pointer, MPIX Async thing, is used instead of directly

passing extra state back to poll fn. This provides some flexibility for imple-

mentations to support more features. MPIX Async thing combines application-

side context (i.e., extra state) and the implementation-side context. Inside

poll fn, the original extra state can be readily retrieved with:

void ∗ MPIX A s y n c g et sta t e ( MPIX Async thing a s y n c t h i n g )

One example feature the implementation may support is to allow applications

to spawn additional async tasks while progressing a pending task inside poll fn.

This is accomplished with the following API:

void ∗ MPIX Async spawn ( MPIX Async thing as yn c t hi ng ,

M P I X A s y n c p o l l f un c ti o n p o l l f n , void ∗ e x t r a s t a t e ,

MPIX Stream str eam )

The newly spawned tasks are temporarily stored inside async thing and will

be processed after poll fn returns. This allows the implementation to avoid po-

tential recursion and the need for global queue protection before calling poll fn.

poll fn returns either MPIX ASYNC PENDING if the async task is in progress

or MPIX ASYNC DONE if the async task is completed. Before poll fn returns

MPIX ASYNC DONE, it must clean up the application context associated with the

11

async task, typically by freeing the structure behind extra state. The MPI

library will then free the context behind MPIX Async thing.

The MPIX Async interface allows users to extend MPI’s functionality and

integrate custom progression schemes into MPI progress. For example, an MPI

collective can be viewed as a fixed task graph composed of individual operations

and their dependencies. By defining poll fn, one can advance a specific task

graph for a custom collective algorithm within MPI progress. Integrating into

MPI progress simplifies the process by eliminating the need for constructing

separate progress mechanisms and avoiding performance issues such as managing

MPI request objects, extra progress threads, and thread contention.

3.4 Completion Query on MPI Requests

When a task finishes its computation for a given stage, it must wait for the com-

pletion of its dependent nonblocking operations. However, invoking redundant

progress in the presence of a progress engine is unnecessary and undesirable.

Instead of using MPI Wait, we need a request completion query function that

does not trigger progress. The following API provides this functionality:

b o o l M P I X R e q u e s t i s c o m p l e t e ( MPI Request r e q u e s t )

The implementation simply queries an atomic flag for the request, resulting

in minimal overhead when repeatedly polling this function. Importantly, there

are no side effects that would interfere with other requests or other progress calls.

MPIX Request is complete is also useful in the MPIX Async poll fn when the

application-layer task is built upon MPI operations. Each MPI progress may

internally use a context to coordinate various parts, thus, invoking progress

recursively inside the poll fn is prohibited.

3.5 Programming Scheme

Figure 6 illustrates how the above extension APIs fit together in a programming

scheme where the task contexts are separated from the progress engine. (Due to

space constraints, we do not provide a code listing.) In this scheme, program-

mers utilize asynchronous APIs, such as MPI nonblocking operations, to overlap

with computational work. However, to achieve maximum overlap, a progress

engine is generally required to ensure that asynchronous operations continue

to progress while the main threads are engaged in computation. By separat-

ing individual task contexts, such as MPI request objects, from the progress

engine, the design is simplified, and the additional latency that may occur

from synchronizing request objects between tasks and the progress engine is

avoided. MPIX Stream progress enables applications to invoke MPI progress

without requiring the use of request objects, thus separating task contexts.

MPIX Request is complete allows tasks to synchronize on asynchronous jobs

without invoking MPI progress, ensuring orthogonality between tasks and the

progress engine. Finally, MPIX Async extensions enable custom asynchronous

12

Fig. 6. Programming scheme that separates operation context and progress engine.

operations to be progressed by the MPI progress, thereby making MPI progress

interoperable with non-MPI asynchronous designs. Utilizing MPI progress to

advance all asynchronous operations simplifies the design of a progress engine

and makes optimizations, such as using MPIX Streams to avoid accidental con-

tention, generally accessible to the entire application.

4 Examples

In this section, we present various examples using the MPIX Async extensions.

An important metric for quantifying progress performance is the progress la-

tency, defined as the average elapsed time between a task’s completion and when

the user code responds to the event. We use a dummy task to directly measure

progress latency. Unless otherwise noted, our experiments were conducted on a

local workstation with an 8-core i7-7820X CPU.

4.1 Dummy task

For most of the following examples, we use a dummy task that completes after

a predetermined duration. This simulates an asynchronous job completed via

offloading. Instead of querying a completion status, we check for the elapsed

time. The code is provided in Listing 1.2. By presetting the duration for the

dummy task to complete, we can measure the latency of the progress engine’s

response to the completion event.

Listing 1.2. An example of dummy async task using MPIX Async extensions

#d ef i ne TASK DURATION 1 . 0

#d ef i ne NUM TASKS 10

st r uc t dummy state {

double w t i m e f i n i s h ;

} ;

13

s t a t i c i n t dummy poll ( MPIX Async thing th in g )

{

st r uc t dummy state ∗ p = M P I X Asyn c g e t s tate ( th i n g ) ;

double wtime = MPI Wtime ( ) ;

i f ( wtime >= p−>w t i m e f i n i s h ) {

/∗ d o u b l e d i f f = ( wtime − p−>w t i m e f i n i sh ) ∗ 1e6 ; ∗ /

f r e e ( p ) ;

return MPIX ASYNC DONE;

}

return MPIX ASYNC NOPROGRESS;

}

s t a t i c void a d d a s ync ( void )

{

st r uc t dummy state ∗ p = m al l o c ( s i z e o f ( st r uc t dummy state ) ) ;

p−>w t i m e f i n i s h = MPI Wtime ( ) + TASK DURATION ;

MPIX As yn c st ar t ( dummy poll , p) ;

}

i n t main ( i n t ar g c , const char ∗ ∗ a rgv )

{

MP I I n i t (NULL, NULL) ;

f o r ( i n t i = 0 ; i < NUM TASKS; i ++) {

ad d a s y n c ( ) ;

}

/∗ In t h i s example , M PI Fi nal ize w i l l s p i n pr o g re s s u n t i l a l l as ync

t a s k s c o m p l e tes ∗ /

M P I F i n a li z e ( ) ;

return 0 ;

}

In Listing 1.2, there is no active progress management since we only

care about the tasks being launched and completed without synchronization.

MPI Finalize will always continue progress until all asynchronous tasks are

done. To add synchronization, global states or references to synchronization vari-

ables are needed. This is demonstrated in Listing 1.3, where the new code from

the previous listing is highlighted. We also added a wait-loop after adding the

tasks. Since there is no computation in our example, we simply drive the progress

within the main thread.

Listing 1.3. Adding synchronization counter, wait-progress loop, and stubs for latency

benchmark

#d ef i ne TASK DURATION 1 . 0

#d e f i l e NUM TASKS 10

st r uc t dummy state {

double w t i m e f i n i s h ;

i n t ∗ c o u n t e r p t r ;

} ;

void a d d s ta t ( double la t e nc y ) ; /∗ impl e me n ta t io n om i t t ed ∗/

void r e p o r t s t a t ( void ) ; /∗ impl e me n ta t io n om i t t ed ∗/

s t a t i c i n t dummy poll ( MPIX Async thing th i n g )

{

st r uc t dummy state ∗ p = M P I X Asyn c g e t s tate ( t h i n g ) ;

double wtime = MPI Wtime ( ) ;

i f ( wtime >= p−>w t i m e f i n i s h ) {

a d d s t a t ( wtime − p−>w t i m e f i n i s h ) ∗ 1 e6 ;

14

( ∗ ( p−>c o u n t e r p t r ) ) −−;

f r e e ( p ) ;

return MPIX ASYNC DONE;

}

return MPIX ASYNC NOPROGRESS;

}

s t a t i c void a d d a s ync ( i n t ∗ c o u n t e r p t r )

{

st r uc t dummy state ∗ p = m al l o c ( s i z e o f ( st r uc t dummy state ) ) ;

p−>w t i m e f i n i s h = MPI Wtime ( ) + TASK DURATION ;

p−>c o u n t e r p t r = c o u n t e r p t r ;

MPIX As yn c st ar t ( dummy poll , p) ;

}

i n t main ( i n t ar g c , const char ∗ ∗ a rgv )

{

MP I I n i t (NULL, NULL) ;

i n t c ou nt er = NUM TASKS;

f o r ( i n t i = 0 ; i < NUM

TASKS ; i ++) {

ad d a s y n c (& c o un t e r ) ;

}

/∗ E s s e n t i a l l y a wait b l o c k ∗ /

while ( c ou nt er > 0) {

MP IX St rea m p ro gre ss (MPIX STREAM NULL ) ;

}

r e p o r t

s t a t ( ) ;

M P I F i n a li z e ( ) ;

return 0 ;

}

4.2 Performance Factors in Async Progress

Several performance factors influence the average response latency to event com-

pletion in asynchronous progress.

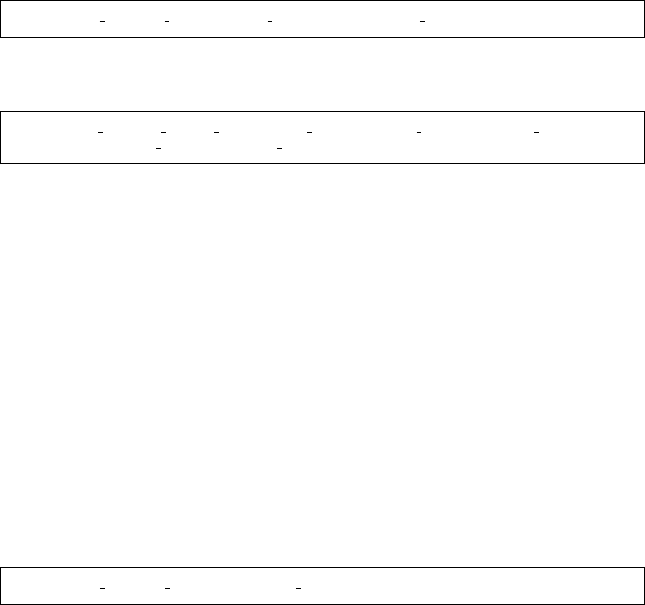

Number of pending tasks During each MPI progress call (e.g.,

MPIX Stream progress), MPI will invoke the async poll fn for each pending

async task sequentially. The cycles spent processing numerous tasks may delay

the response time to a specific task’s completion event. Therefore, as the num-

ber of pending tasks increases, we expect an increase in response latency. This

expectation is confirmed by the experimental results shown in Figure 7.

If all the pending tasks are independent, each progress call must invoke

poll fn for every pending task, leading to a performance degradation as the

number of pending tasks rises. Notably, when there are fewer than 32 pending

tasks, the latency overhead remains below 0.5 microseconds.

Most applications do not create thousands of independent tasks randomly.

Typically, tasks have dependencies on each other, forming a task graph, or they

are grouped into streams with implicit linear dependencies. When tasks have de-

pendencies, it is possible to skip polling progress for tasks whose dependent tasks

are not yet completed. While implementing a general-purpose task management

15

system to track dependencies is complex, we will demonstrate in 4.3 through an

example how users can manage task dependencies within their poll fn.

Fig. 7. Latency overhead in microsec-

onds as the number of pending async

tasks increases.

Fig. 8. Impact of poll function over-

head on event response latency. Each

measurement runs 10 concurrent pend-

ing tasks. The delay is implemented by

busy-polling MPI Wtime.

Poll Function Overhead In addition to the number of pending tasks, the

overhead of individual progress poll functions (poll fn) can also affect the aver-

age event response latency. If a single poll fn takes a disproportionate amount

of time to execute, the overall response time to events will increase. This effect

is illustrated in Figure 8, where we manually inserted delays in poll fn when

the task is still pending.

The MPIX Async interface is designed for lightweight poll fn functions and

is not suitable for tasks that require significant CPU cycles to respond to an

event. This is generally true for all collated progress mechanisms: when one part

of the progress takes significant overhead, it negatively impacts the performance

of other tasks.

To avoid heavy poll fn overhead, it is recommended to enqueue events and

postpone the heavy work outside of the progress callbacks. This approach en-

sures that the poll fn remains lightweight, minimizing its impact on overall

performance.

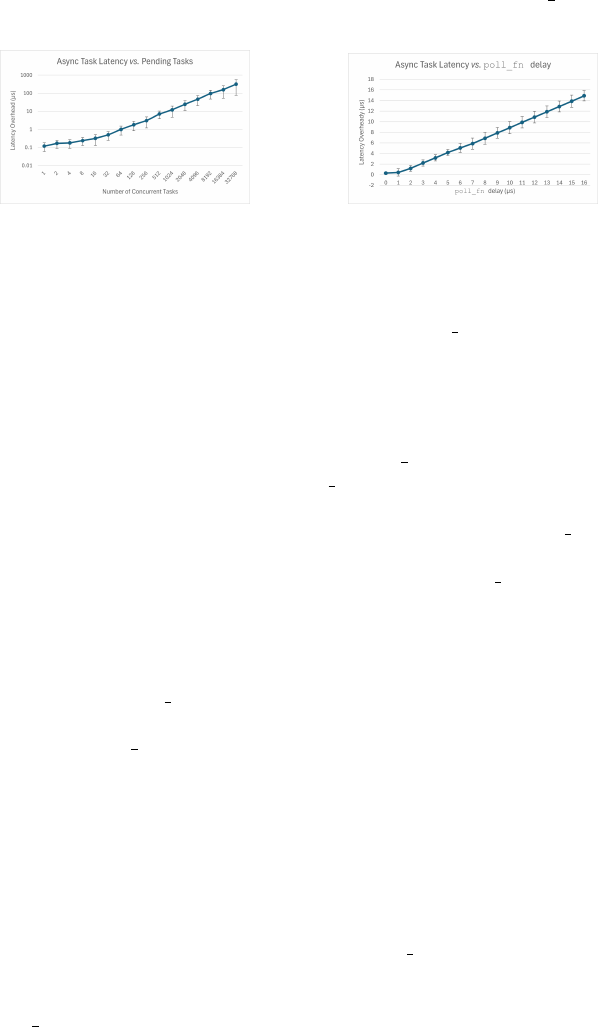

Thread Contention When multiple threads concurrently execute progress,

they contend for a lock to avoid corrupting the global pending task list. Even if

the tasks are independent, multiple threads running collated progress will still

contend for locks, leading to performance degradation. As illustrated in Figure 9,

the observed latency increases with the number of concurrent progress threads.

It’s important to note that individual poll fn functions may access

application-specific global states that require lock protection from other parts

of the application code. This lock protection should be implemented within

the poll fn by the application. MPI only ensures thread safety between MPI

progress calls.

16

To avoid performance degradation, it is advisable to limit the number

of progress threads–a single progress thread often suffices. Sometimes, MPI

progress loops are invoked implicitly. For example, blocking calls in MPI, such

as MPI Recv, often include implicit progress similar to MPI Wait. Even initiation

calls such as MPI Isend may contend for a lock with the MPI progress thread.

This global lock contention contributes to the notorious poor performance of

MPI THREAD MULTIPLE [15].

Using MPIX Stream appropriately can mitigate the issue of global thread

contention. We will demonstrate this in 4.4.

Fig. 9. Latency overhead in microsec-

onds as the number of concurrent

progress threads increases. Each mea-

surement runs 10 concurrent pending

tasks.

Fig. 10. Latency versus the number of

pending tasks when the progress call-

back only checks the task at the top of

the queue.

4.3 Async task class

To avoid wasting cycles polling progress for tasks with pending dependencies, it

is essential to track task dependencies. This can be managed either within MPI

implementations or inside the callback poll fn. However, MPI implementations

can only handle general task graphs with potentially unbounded complexity,

while applications often have much simpler dependency structures. Therefore, it

is simpler and more effective for applications to manage task dependencies within

the poll fn. Instead of polling progress for individual asynchronous tasks, users

can design task subsystems or asynchronous task classes. Within the poll fn,

they can poll progress for the entire task class.

In Listing 1.4, instead of calling MPIX Async start for each task individually,

the tasks are enqueued and managed within the application. A single callback,

class poll, is employed to manage the entire task queue. For simplicity, we

assume all tasks are to be completed in order. Therefore, class poll only needs

to check the completion status of the task at the top of the queue.

Listing 1.4. An example code for managing an entire task queue

st r uc t t a sk {

17

double wtime en d ;

st r uc t t a sk ∗ n ext ;

} ;

st r uc t t a sk ∗ head , ∗ t a i l ;

/∗ NOTE: i f t a s k s ar e to be added and p r o gr es se d from mu l t i p l e

thr e a d s , t he g l o b a l t a sk queue w i l l need lo c k p r o t e c ti o n .

∗/

s t a t i c i n t c l a s s p o l l ( MPIX Async thing th i n g )

{

double tm = MPI Wtime ( ) ;

while ( head && tm >= head−>wtime end ) {

st r uc t t a sk ∗ p = head ;

head = head−>n ext ;

f r e e ( p ) ;

}

i f ( head == NULL) {

return MPIX ASYNC DONE;

} e l s e {

return MPIX

ASYNC NOPROGRESS;

}

}

s t a t i c void a d d a s ync ( void )

{

st r uc t t a sk ∗ p = m a l l oc ( s i z e o f ( s tr u ct t as k ) ) ;

p−>wtim e end = MPI Wtime ( ) + INTERVAL ;

p−>nex t = NULL;

i f ( head == NULL) {

head = p ;

t a i l = p ;

} e l s e {

/∗ sim p l y append ∗/

t a i l −>ne x t = p ;

t a i l = p ;

}

}

i n t main ( i n t ar g c , const char ∗ ∗ a rgv )

{

MP I I n i t (NULL, NULL) ;

i n t co unt = 1 0 ;

f o r ( i n t i = 0 ; i < cou nt ; i ++) {

ad d a s y n c ( ) ;

}

MPIX As yn c st ar t ( c l a s s p o l l , head ) ;

while ( head ) MP IX St rea m p ro gre ss (MPIX STREAM NULL) ;

M P I F i n a li z e ( ) ;

return 0 ;

}

As shown in Figure 10, the average latency stays constant (within measure-

ment noise) regardless of the number of pending tasks.

4.4 Concurrent progress streams

If running multiple progress threads is necessary, or if there are multiple task

systems where collating progress might affect each other’s latency, one should

consider using MPIX Stream to avoid contention between the various threads.

18

Listing 4.4 demonstrates the use of MPIX streams to scale up the application

with multiple threads. We create a separate MPIX stream for each thread. Each

thread uses its own stream in MPIX Async start and MPIX Stream progress,

thereby avoiding lock contention between threads.

Listing 1.5. An example code using MPIX Streams to manage multiple progress

threads

#d ef i ne NUM TASKS 10

st r uc t dummy state {

double w t i me co m p l ete ;

i n t ∗ c o u n t e r p t r ;

} ;

s t a t i c i n t dummy poll ( MPIX Async thing th i n g )

{

st r uc t dummy state ∗ p = M P I X Asyn c g e t s tate ( t h i n g ) ;

i f ( MPI Wtime ( ) >= p−>wt i m e com p l e te ) {

( ∗ ( p−>c o u n t e r p t r ) ) −−;

f r e e ( p ) ;

return MPIX ASYNC DONE;

}

return MPIX ASYNC NOPROGRESS;

}

s t a t i c void a d d a s ync ( i n t ∗ c o u n te r p t r , MPIX Stream s t re a m )

{

st r uc t dummy state ∗ p = m al l o c ( s i z e o f ( st r uc t dummy state ) ) ;

p−>w tim e c o mple t e = MP I Wtime ( ) + INTERVAL + rand ( ) ∗ 1e−5 /

RAND MAX;

p−>c o u n t e r p t r = c o u n t e r p t r ;

MPIX As yn c st ar t ( dummy poll , p , s tr e am ) ;

}

MPIX Stream s t re a m s [MAX THREADS ] ;

void ∗ t h r e a d f n ( void ∗ arg )

{

i n t t h r e a d i d = ( i n t ) ( i n t p t r t ) ar g ;

MPIX Stream str eam = s tr e a m s [ t h r e a d i d ] ;

i n t c ou nt er = NUM TASKS;

f o r ( i n t i = 0 ; i < NUM TASKS; i ++) {

ad d a s y n c (& c ounte r , s tr e am ) ;

}

while ( c ou nt er > 0) MPIX St rea m pro gre ss ( st r ea m ) ;

}

i n t main ( i n t ar g c , const char ∗ ∗ a rgv )

{

i n t p r ov i d ed ;

M P I I n it t h r e a d (NULL, NULL, MPI THREAD MULTIPLE, &p ro v i de d ) ;

a s s e r t ( p r o v i de d == MPI THREAD MULTIPLE) ;

i n t num threa ds = 1 0 ;

f o r ( i n t i = 0 ; i < nu m t hr ea ds ; i ++) {

MPIX S tr ea m create (MPI INFO NULL , &s t r e a m s [ i ] ) ;

}

p t h r ea d t t h r e a d i d s [MAX THREADS ] ;

f o r ( i n t i = 0 ; i < nu m t hr ea ds ; i ++) {

p t h r e a d c r e a t e (& t h r e a d i d s [ i ] , NULL,

t h re a d f n , ( void ∗ ) ( i n t p t r t ) i ) ;

}

19

f o r ( i n t i = 0 ; i < nu m t hr ea ds ; i ++) {

p t h r e a d j o i n ( t h r e a d i d s [ i ] , NULL) ;

}

f o r ( i n t i = 0 ; i < nu m t hr ea ds ; i ++) {

MPIX S tr ea m free(& s t re a m s [ i ] ) ;

}

M P I F i n a li z e ( ) ;

return 0 ;

}

As shown in Figure 11, the average progress latency does not increase sig-

nificantly as the number of threads increases. The slight increase in latency is

within measurement noise and is likely attributable to core power fluctuations

due to the number of active cores.

Fig. 11. Latency versus the number of

concurrent progress threads using dif-

ferent MPIX streams. Each measure-

ment runs 10 concurrent pending tasks.

Fig. 12. Overhead of generating re-

quest completion events via explicit

queries.

4.5 Request Completion Callback

While the MPIX Async API does not directly provide event callbacks for an

event-driven programming style, generating events within the progress hook

(i.e., poll fn) is straightforward. The following example demonstrates using

the MPIX Async API and MPIX Request is complete to generate MPI request

completion events.

Listing 1.6. An example of implementing callbacks upon completion of MPI requests

i n t n u m r e q u e s t s ;

MPI Request r e q u e s t a r r a y [ MAX REQUESTS ] ;

s t a t i c i n t dummy poll ( MPIX Async thing th i n g )

{

i n t num pending = 0 ;

f o r ( i n t i = 0 ; i < n u m r e q u e s t s ; i ++) {

i f ( r e q u e s t a r r a y [ i ] == MPI REQUEST NULL) {

/∗ NOOP ∗/

} e l s e i f ( M P I X R e q u e s t i s c o m p l ete ( r e q u e s t a r r a y [ i ] ) ) {

20

/∗ c a l l on−co m p l eti o n a c t i o n s ∗/

MP I R e qu e s t f re e (& r e q u e s t a r r a y [ i ] ) ;

} e l s e {

num pending++;

}

}

i f ( num pending == 0 ) {

return MPIX ASYNC DONE;

}

return MPIX ASYNC NOPROGRESS;

}

The MPI requests are completed within MPI’s native progress, as shown in

Listing 1.1. Thus, using a separate query loop to generate request comple-

tion events is less efficient than directly generating events within MPI’s na-

tive progress when the requests are first completed. However, the overhead of

MPIX Request is complete is usually just an atomic read instruction, making

it acceptable as long as the number of pending requests is not too large.

Figure 12 measures the overhead of querying requests using the for-loop in

Listing 1.6. The overhead remains within the measurement noise when there are

fewer than 256 pending requests.

4.6 Synchronization via Generalized Request

The MPIX Async API perfectly complements MPI generalized requests. MPIX

Async provides a progression mechanism for asynchronous tasks, while MPI

generalized requests offer a tracking handle—an MPI request—that can be used

with functions like MPI Wait. Together, they enable the extension of MPI func-

tionality at the application layer.

The following example demonstrates how MPIX Async is used in conjunction

with MPI generalized requests.

Listing 1.7. An example of using MPI generaized request and MPIX Async

st r uc t dummy state {

double w t i me co m p l ete ;

MPI Request g re q ;

} ;

s t a t i c i n t dummy poll ( MPIX Async thing th i n g )

{

st r uc t dummy state ∗ p = M P I X Asyn c g e t s tate ( t h i n g ) ;

i f ( MPI Wtime ( ) > p−>wt i m e comp l e t e ) {

MP I Gre q ues t com p let e ( p−>gre q ) ;

f r e e ( p ) ;

return MPIX ASYNC DONE;

}

return MPIX ASYNC NOPROGRESS;

}

s t a t i c i n t q u e r y f n ( void ∗ e x t r a s t a t e , M PI Statu s ∗ s t a t u s ) { return

MPI SUCCESS ; }

s t a t i c i n t f r e e f n ( void ∗ e x t r a s t a t e ) { return MPI SUCCESS ; }

s t a t i c i n t c a n c e l f n ( void ∗ e x t r a s t a t e , i n t co m p l e te ) { return

MPI SUCCESS ; }

void main ( void ) {

MP I I n i t (NULL, NULL) ;

21

MPI Request g re q ;

M P I G r e q ue st s t ar t ( q u er y f n , f r e e f n , c a n c e l f n , NULL, &g r e q ) ;

st r uc t dummy state ∗ p = m al l o c ( s i z e o f ( ∗ p ) ) ;

p−>w tim e c o mple t e = MP I Wtime ( ) + INTERVAL ;

p−>g r e q = g r e q ;

MPIX As yn c st ar t ( dummy poll , p , MPIX STREAM NULL) ;

MPI Wait(&greq , MPI STATUS IGNORE) ;

M P I F i n a li z e ( ) ;

}

For simplicity, dummy query fn, free fn, and cancel fn functions are used.

Calling MPI Wait on a generalized request effectively replaces the manual wait

loop shown in Listing 1.3.

4.7 User-level collective algorithms

One of the motivations behind the MPIX Async extension is to enable user-level

implementation of collective algorithms with performance comparable to native

implementations. Collective algorithms are essentially a collection of communi-

cation patterns built on a core set of operations, including MPI point-to-point

operations, buffer copies, and local reductions.

A key advantage of native collective implementations is their tight integration

with the MPI progress. MPIX Async is designed to provide the same level of

integration to user-level applications.

Below, we present an example of implementing a user-level allreduce algo-

rithm using the MPIX Async APIs. This example implements the recursive dou-

bling allreduce algorithm[9]. For simplicity, the datatype is restricted to MPI INT,

the op to MPI SUM, and the number of processes to a power of 2.

Listing 1.8. An example of a user-level allreduce algorithm

st r uc t m y a l lr e du ce {

i n t ∗ buf , ∗ tmp buf ;

i n t co unt ;

MPI Comm comm;

i n t rank , s i z e ;

i n t t a g ;

i n t mask ;

MPI Request r e q s [ 2 ] ; /∗ send re q u e st and recv r eq u e s t f o r each

round ∗ /

b o o l ∗ d o n e p t r ; /∗ e x t e r n a l c o m plet i o n f l a g ∗ /

} ;

s t a t i c i n t m y a l l r e d u c e p o l l ( MPIX Async thing th in g )

{

st r uc t m y a l lr e du ce ∗ p = M P I X A sync g e t s t a te ( t hi n g ) ;

i n t r eq d o ne = 0 ;

f o r ( i n t i = 0 ; i < 2 ; i ++) {

i f ( p−>r e q s [ i ] == MPI REQUEST NULL) {

r e q d o n e++;

} e l s e i f ( M P I X R e q u e s t i s c o m p l ete ( p−>r e q s [ i ] ) ) {

MP I R e qu e s t f re e (&p−>r e q s [ i ] ) ;

r e q d o n e++;

}

}

22

i f ( r eq d o ne != 2) {

return MPIX ASYNC NOPROGRESS;

}

i f ( p−>mask > 1) {

f o r ( i n t i = 0 ; i < p−>co unt ; i ++) {

p−>buf [ i ] += p−>tmp buf [ i ] ;

}

}

i f ( p−>mask == p−> s i z e ) {

∗ ( p−>d o n e p t r ) = t r u e ;

f r e e (p−>tmp buf ) ;

f r e e ( p ) ;

return MPIX ASYNC DONE;

}

i n t d st = p−>ran k ˆ p−>mask ;

MP I I rec v ( p−>tmp buf , p−>count , MPI INT , d st , p−>tag , p−>comm, &p−>

r e q s [ 0 ] ) ;

MPI

Isend ( p−>buf , p−>count , MPI INT , d st , p−>tag , p−>comm, &p−>r e q s

[ 1 ] ) ;

p−>mask <<= 1 ;

return MPIX ASYNC NOPROGRESS;

}

void My Allreduce ( const void ∗ se ndb uf , void ∗ r ec v b u f , i n t count ,

MPI Datatype dat a ty p e , MPI Op op , MPI Comm comm)

{

i n t rank , s i z e ;

MPI Comm r ank (comm, &ra nk ) ;

MPI Comm size (comm, &s i z e ) ;

/∗ only d e a l wi t h a s p e c i a l c a s e ∗/

a s s e r t ( sendbuf == MPI IN PLACE && datatype == MPI INT && op ==

MPI SUM) ;

a s s e r t ( i s p o f 2 ( s i z e ) ) ;

st r uc t m y a l lr e du ce ∗ p = ma l l o c ( s i z e o f (∗ p ) ) ;

p−>buf = r ec v b u f ;

p−>co unt = cou nt ;

p−>tmp buf = m al l o c ( co u nt ∗ si z e o f ( i n t ) ) ;

p−>r e q s [ 0 ] = p−>r e q s [ 1 ] = MPI REQUEST NULL ;

p−>comm = comm ;

p−>ran k = rank ;

p−> s i z e = s i z e ;

p−>mask = 1 ;

p−>t a g = MYALLREDUCE TAG;

b o o l done = f a l s e ;

p−>d on e p t r = &done ;

MPIX As yn c st ar t ( m y a l l r e d u c e p o l l , p , MPIX STREAM NULL) ;

while ( ! done ) MPIX St rea m pro gre ss (MPIX STREAM NULL) ;

}

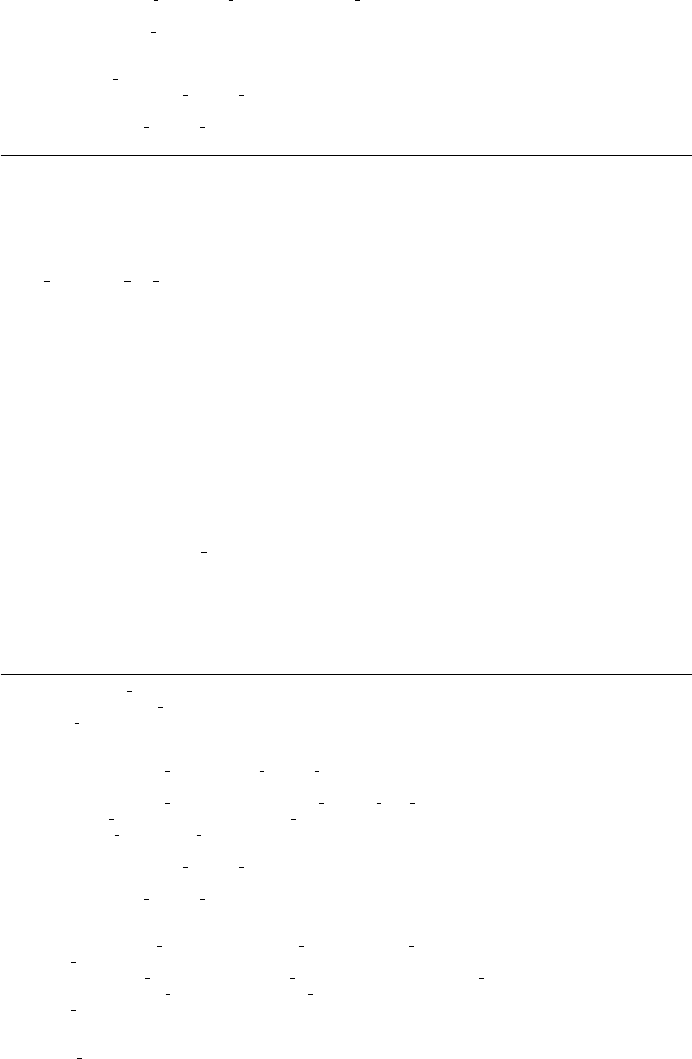

To compare the performance of this custom user-level allreduce against MPICH’s

MPI Iallreduce using the same recursive doubling algorithm, we conducted

experiments on the Bebop cluster at Argonne National Laboratory Computing

Resource Center. The experiment measures the latency of allreduce of a single

integer. The results are shown in Figure 13.

The custom user-level implementation actually outperforms MPICH’s native

MPI Iallreduce. We believe this is due to the specific assumptions and shortcuts

23

in the custom implementation. For example, we assume the number of processes

is a power of 2 and that sendbuf is MPI INPLACE, which avoids certain checks

and branches. Additionally, restricting to MPI INT and MPI SUM avoids a datatype

switch and the function-call overhead of calling an operation function.

This highlights an advantage of custom code over an optimized MPI im-

plementation: the former can leverage specific contexts from the application to

avoid complexities and achieve greater efficiency.

Fig. 13. Custom single-integer allreduce latency vs MPI Iallreduce. Intel(R) Xeon(R)

CPU E5-2695 v4 @ 2.10GHz. Nodes interconnect via Omni-Path Fabric. One process

per node.

5 Related Work

Several related works address issues with MPI progress and the management of

asynchronous tasks within MPI. Here, we provide a brief review and compare

these approaches with our proposed methods.

5.1 MPICH Asynchronous Progress

With MPICH and its derived MPI implementations, users can set an environ-

ment variable, such as MPIR CVAR ASYNC PROGRESS, to enable a dedicated back-

ground thread to poll progress in a busy loop. This is the simplest method for

ensuring that MPI will always progress and complete any nonblocking operations

after they are initiated, without requiring additional MPI calls from the appli-

cation. However, this method has significant performance issues and is generally

not recommended.

The first issue is lock contention between the progress thread and the main

thread. Enabling async progress forces the use of the MPI THREAD MULTIPLE

thread level, where all MPI operations require lock protection for thread safety.

Because the async progress thread constantly tries to make progress on opera-

tions, it creates latency overhead for all MPI calls due to lock contention. On

many systems, thread locks are implemented using unfair mutexes, which can

24

lead to a lock monopoly issue. In this scenario, a thread that releases a lock and

immediately tries to reacquire it has a better chance of getting the lock than

another thread trying to acquire the same lock. Consequently, MPI calls from

the main thread may experience disproportionate latency due to the difficulty

of acquiring the lock from the async thread. Although adopting a fair mutex

implementation may alleviate this issue, the lock contention overhead remains

significant, especially for small messages.

Secondly, the async progress option adds one progress thread for every MPI

process. In an oversubscribed situation, where there are as many MPI processes

on a single node as there are cores, the extra async thread significantly slows

down the application due to CPU core sharing among all threads.

MVAPICH [10] has proposed a design to address these issues by identifying

scenarios where asynchronous progress is required and putting the async thread

to sleep when it is not required or beneficial. Their benchmarks showed up to a

60

With the extension MPIX Stream progress, applications can easily imple-

ment the async progress thread within the application layer. The same tuning

design as that adopted in MVAPICH can be applied. Instead of the implementa-

tion detecting where async progress is required, the application can know where

it is needed by design, thus controlling the progress thread more precisely and

directly. MPIX Stream progress also allows applications to use MPIX streams

to target async progress for specific contexts, thereby avoiding lock contention

issues altogether. In contrast, it is difficult for an MPI implementation to detect

user contexts or determine the scope of global progress.

5.2 Generalized Request

MPI includes an API called generalized requests, which allows users to wrap

an asynchronous task into an MPI request, enabling it to be managed simi-

larly to an MPI nonblocking operation. A generalized request is created via

MPI

Grequest start:

int MP I_ Gr eq ue st _s t a r t ( query_fn , fre e_fn , canc el_fn , ext ra_s tate , r equ est )

MPI interfaces with the user async tasks via three callbacks: query fn,

free fn, and cancel fn. query fn is called to fill in an MPI status after the

request is completed, free fn is called when the request is freed, and cancel fn

is called when the request is canceled.

While the generalized request behaves like a real MPI request–i.e., it can be

passed to MPI Test or MPI Wait–it does not fulfill the primary purpose of users

calling MPI Test or MPI Wait, which is to poke progress on the nonblocking oper-

ation behind the request. There is no built-in progress mechanism for generalized

requests; users are expected to progress the async task behind the generalized

request outside of MPI. This raises questions about the usefulness of generalized

requests. Since users don’t rely on MPI to progress the task or query its status,

a generalized MPI request is merely a redundant handle. Indeed, a large-scale

survey of MPI applications [6] found no usages of generalized requests. The few

25

works in the literature that use generalized requests are all for implementing

MPI APIs[7,3] on top of a core implementation, necessitating the use of MPI

requests. Interestingly, both works highlighted the lack of progress semantics

in generalized requests and proposed adding a progress-polling callback to the

interface.

We believe the MPIX async extension proposed in this paper perfectly com-

plements the generalized request by providing a progression mechanism. We will

demonstrate this case with an example in the following section 4.6.

5.3 MPIX Schedule

MPIX Schedule[11] is a proposal to expose MPI’s internal nonblocking collective

system to applications so that users can create their own nonblocking collective

algorithms or a series of coordinated MPI operations similar to a nonblocking

MPI collective. The proposal includes the following APIs:

int MP IX _S ch ed u le _c re at e ( M PI X_ Sch ed ul e * sc hedule , int a uto _f ree );

int MP IX _S c he du l e _ ad d_ o pe ra ti o n ( MP IX _S ch ed ul e schedul e , M PI _Re qu es t request ,

int aut o_f re e ) ;

int MP IX _ Sc he du l e_ ad d _m pi _ op er a ti on ( MPIX _S ch ed ul e schedu le , MP I_O p op , void *

invec , void * inoutvec , int len , M PI _D at at yp e da tat ype );

int MP IX _ S c he du l e_ ma r k_ re s et _p oi n t ( MP IX _S ch ed ul e sc hed ul e ) ;

int MP IX _ Sc he d ul e_ m ar k_ c om pl e ti on _ po in t ( M PI X_ Sc he du le s che du le );

int MP IX _S c he du le _ cr ea te _ ro un d ( M PI X_ Sc he d ul e s che dul e );

int MP IX _S ch ed u le _c om mi t ( M PI X_ Sch ed ul e sched ule , M PI _R eq ues t * requ est );

int MP IX _S ch ed ul e_ f r e e ( MP IX _S ch ed ul e * sche dul e );

These APIs are designed for a specific implementation of persistent non-

blocking collectives, which includes a set of operations for initiation, a set of

operations for finalization, and rounds of operations for the repeated invocation

of the algorithm.

However, it is challenging for MPIX Schedule to accommodate non-MPI op-

erations. The proposal only has APIs to add operations represented as an MPI

request or an MPI op. While it is possible to wrap a custom asynchronous op-

eration via a generalized request, the usage is cumbersome. More critically, the

lack of a progress mechanism limits the performance users can achieve compared

to what is possible within an MPI implementation.

In contrast, the MPIX Async APIs address the root issue of providing in-

teroperable MPI progress. Its simple yet flexible progress hook-based interface

can accommodate arbitrary asynchronous tasks. Combined with generalized re-

quests, it enables users to effectively experiment with MPI extensions, such as

experimental collective algorithms, entirely from the application layer.

26

5.4 MPIX Continue

MPIX Continue [12] is a proposal that allows MPI to call back a user-defined

function upon the completion of a request, eliminating the need for the applica-

tion to continuously test the request for completion.

The proposal consists of the following APIs:

int MP IX _C on ti nu e_ i n i t ( MP I_ Req ue st * cont_ req , M PI_ In fo info );

int MPI X_ Co nt in ue ( M PI _R equ es t * op_req uest , int * flag ,

MP IX _C o nt in ue _ cb _f un c ti on *cb , voi d * cb_dat a , MP I_ St atu s * status ,

MPI _R eq ue st c ont _r eq );

int MP I X _C on ti nu ea ll ( int count , M PI _Re qu es t op _r eq ues t [] , int * flag ,

MP IX _C o nt in ue _ cb _f un c ti on *cb , voi d * cb_dat a , MP I_ St atu s st at use s [] ,

MPI _R eq ue st c ont _r eq );

The motivation behind the MPIX Continue APIs is to simplify the man-

agement of MPI requests within a task-based programming model. Task-based

programming models often have their own task tracking, scheduling, and progres-

sion mechanisms. However, MPI’s nonblocking interface requires applications to

actively test or wait on individual requests, which can lead to thread contention

and wasted CPU cycles due to redundant progression.

Our proposed extensions address directly many of the challenges that

the MPIX Continue proposal seeks to resolve. MPIX Stream progress can

be used to implement a polling service that integrates with the task run-

time system without requiring synchronization of MPI requests. Meanwhile,

MPIX Request is complete allows tasks to query the status of their dependent

requests without triggering redundant progress invocations.

The MPIX Continue proposal adopts an event-driven callback interface. As

shown in section 4.5, MPIX Async extension can be used to implement a poor

man’s version of callbacks on request completion. It is less efficient than the

MPIX Continue proposal since the latter can be implemented within an imple-

mentation’s internal progress rather than active queries in a separate progress

hook. However, the overhead should be negligible until the number of registered

MPI requests becomes significant.

On the other hand, because the MPIX Continue is integrated within the

MPI progress engine, there can be issues, including callback latency interference,

thread contention, and potential recursive context, that are complex and difficult

to address due to the opaqueness of MPI progress. In comparison, MPIX Async

extension provides the explicitness and flexibility for applications to address such

issues.

6 Summary

In summary, we present a suite of MPI extensions crafted to provide an in-

teroperable MPI progress, which grants applications explicit control over MPI

progress management, the ability to incorporate user-defined asynchronous tasks

into MPI, and seamless integration with task-based and event-driven program-

ming models. The MPIX Stream progress and MPIX Request is complete APIs

27

effectively decouple the context for invoking MPI progress and querying the

completion status of MPI requests, thereby circumventing synchronization com-

plexities and task-engine interference. Meanwhile, the MPIX Async proposal

empowers applications to integrate custom progress hooks into MPI progress,

enabling them to harness MPI progress and extend MPI functionality from the

user layer. Through examples and micro-benchmark testing, we demonstrate

the effectiveness of these extensions in bringing MPI to modern asynchronous

programming.

Acknowledgments. This research was supported by the U.S. Department of

Energy, Office of Science, under Contract DE-AC02-06CH11357.

References

1. Castillo, E., Jain, N., Casas, M., Moreto, M., Schulz, M., Beivide, R., Valero,

M., Bhatele, A.: Optimizing computation-communication overlap in asynchronous

task-based programs. In: Proceedings of the ACM International Conference on

Supercomputing. pp. 380–391 (2019)

2. Haller, P., Odersky, M.: Event-based programming without inversion of control.

In: Joint Modular Languages Conference. pp. 4–22. Springer (2006)

3. Hatanaka, M., Takagi, M., Hori, A., Ishikawa, Y.: Offloaded MPI persistent col-

lectives using persistent generalized request interface. In: Proceedings of the 24th

European MPI Users’ Group Meeting. pp. 1–10 (2017)

4. Hoefler, T., Lumsdaine, A.: Message progression in parallel computing – to thread

or not to thread? In: 2008 IEEE International Conference on Cluster Computing.

pp. 213–222. IEEE (2008)

5. Holmes, D.J., Skjellum, A., Schafer, D.: Why is MPI (perceived to be) so complex?:

Part 1 – does strong progress simplify MPI? In: Proceedings of the 27th European

MPI Users’ Group Meeting. pp. 21–30 (2020)

6. Laguna, I., Marshall, R., Mohror, K., Ruefenacht, M., Skjellum, A., Sultana, N.: A

large-scale study of MPI usage in open-source HPC applications. In: Proceedings

of the International Conference for High Performance Computing, Networking,

Storage and Analysis. pp. 1–14 (2019)

7. Latham, R., Gropp, W., Ross, R., Thakur, R.: Extending the MPI-2 generalized

request interface. In: Recent Advances in Parallel Virtual Machine and Message

Passing Interface: 14th European PVM/MPI User’s Group Meeting, Paris, France,

September 30-October 3, 2007. Proceedings 14. pp. 223–232. Springer (2007)

8. Okur, S., Hartveld, D.L., Dig, D., Deursen, A.v.: A study and toolkit for asyn-

chronous programming in C#. In: Proceedings of the 36th International Confer-

ence on Software Engineering. pp. 1117–1127 (2014)

9. Ruefenacht, M., Bull, M., Booth, S.: Generalisation of recursive doubling for allre-

duce: Now with simulation. Parallel Computing 69, 24–44 (2017)

10. Ruhela, A., Subramoni, H., Chakraborty, S., Bayatpour, M., Kousha, P., Panda,

D.K.: Efficient design for MPI asynchronous progress without dedicated resources.

Parallel Computing 85, 13–26 (2019)

11. Schafer, D., Ghafoor, S., Holmes, D., Ruefenacht, M., Skjellum, A.: User-level

scheduled communications for MPI. In: 2019 IEEE 26th International Conference

on High Performance Computing, Data, and Analytics (HiPC). pp. 290–300. IEEE

(2019)

28

12. Schuchart, J., Samfass, P., Niethammer, C., Gracia, J., Bosilca, G.: Callback-based

completion notification using MPI continuations. Parallel Computing 106, 102793

(2021)

13. Sergent, M., Dagrada, M., Carribault, P., Jaeger, J., P´erache, M., Papaur´e, G.:

Efficient communication/computation overlap with MPI+OpenMP runtimes col-

laboration. In: Euro-Par 2018: Parallel Processing: 24th International Conference

on Parallel and Distributed Computing, Turin, Italy, August 27-31, 2018, Proceed-

ings 24. pp. 560–572. Springer (2018)

14. Thakur, R., Gropp, W., Lusk, E.: On implementing MPI-IO portably and with

high performance. In: Proceedings of the sixth workshop on I/O in parallel and

distributed systems. pp. 23–32 (1999)

15. Zhou, H., Raffenetti, K., Guo, Y., Thakur, R.: MPIX Stream: An explicit solution

to hybrid MPI+X programming. In: Proceedings of the 29th European MPI Users’

Group Meeting. pp. 1–10 (2022)

29